Here, in chronological order, are ten of the year’s top blog posts — among the most popular and the most interesting.

On-device speech processing has multiple benefits: a reduction in latency, lowered bandwidth consumption, and increased availability in in-car units and other applications where Internet connectivity is intermittent. Learn how innovative training methods and model compression techniques combine with clever engineering to keep Alexa’s speech processing local.

The Prime Video app runs on 8,000 device types, such as gaming consoles, TVs, set-top boxes, and USB-powered streaming sticks, and updating the app poses a difficult trade-off between updatability and performance. Moving to WebAssembly (Wasm), a framework that allows code written in high-level languages to run efficiently on any device, helps resolve that trade-off, reducing the average frame times on a mid-range TV from 28 milliseconds to 18.

The quantile function is simply the inverse of the cumulative distribution function (if it exists). Its graph can be produced by flipping the cumulative distribution function's graph over.

The quantile function, which takes a quantile (a percentage of a distribution) as input and outputs the value of a variable, can answer questions like “If I want to guarantee that 95% of my customers receive their orders within 24 hours, how much inventory do I need to keep on hand?” Statisticians usually use regression analysis to approximate it, but a new approximation method lets the user query the function at different points, to optimize the trade-offs between performance criteria.
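To make the inverse relationship concrete, here is a minimal sketch of an empirical quantile function. It is not the approximation method described in the post; it simply inverts the empirical cumulative distribution of a sample. The function name `empirical_quantile` and the demand figures are made up for illustration.

```python
import numpy as np

def empirical_quantile(samples, q):
    """Smallest observed value x such that at least a fraction q of samples are <= x."""
    xs = np.sort(np.asarray(samples, dtype=float))
    # Index of the first order statistic whose empirical CDF reaches q.
    k = int(np.ceil(q * len(xs))) - 1
    # Clamp so that q = 0 and q = 1 stay inside the sample.
    return xs[min(max(k, 0), len(xs) - 1)]

# Hypothetical daily demand figures, for illustration only.
daily_demand = [12, 15, 9, 20, 18, 14, 11, 25, 16, 13]

# Stock level that would have covered demand on at least 95% of these days.
stock_level = empirical_quantile(daily_demand, 0.95)
median_demand = empirical_quantile(daily_demand, 0.5)
```

Querying the same function at several values of `q` is what lets a planner compare service levels against inventory cost.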

In April, Amazon released a new dataset called MASSIVE, composed of one million labeled utterances spanning 51 (now, 52) languages, along with open-source code that provides examples of how to perform massively multilingual natural-language-understanding (NLU) modeling. By learning a shared data representation that spans languages, an NLU model can transfer knowledge from languages with abundant training data to those in which training data is scarce.

Every column of pixels in a digital image corresponds to a ray extending across a 2-D map of the field of view. The ray’s origin is the location of the camera on the map.

Constructing a top-down “bird’s-eye” view of a scene on the basis of standard sideways-on photographs is an important problem for autonomous vehicles, which need to build maps of their immediate environments. At the International Conference on Robotics and Automation (ICRA), Amazon researchers and colleagues at the University of Surrey won the overall outstanding-paper award for a new approach to the problem that strongly improves on all existing methods on three different datasets.
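The correspondence between image columns and rays on the map can be sketched with basic geometry. The snippet below assumes an ideal pinhole camera placed at the map origin and facing along the +y axis; the function name, the image width, and the field of view are illustrative assumptions, not details from the paper.

```python
import math

def column_to_ray(col, image_width, horizontal_fov_deg):
    """Unit direction (dx, dy) on the 2-D map of the ray seen by one pixel column.

    Assumes a pinhole camera at the map origin looking along +y,
    with +x pointing to the camera's right.
    """
    # Focal length in pixels, derived from the assumed horizontal field of view.
    focal_px = (image_width / 2) / math.tan(math.radians(horizontal_fov_deg) / 2)
    # Horizontal offset of the column's center from the optical axis.
    offset_px = (col + 0.5) - image_width / 2
    # Signed angle between the ray and the viewing direction.
    angle = math.atan2(offset_px, focal_px)
    return math.sin(angle), math.cos(angle)

# Leftmost and rightmost columns of a hypothetical 640-pixel-wide image.
left = column_to_ray(0, 640, 90.0)
right = column_to_ray(639, 640, 90.0)
```

Every point along such a ray projects into the same image column, which is why depth along the ray has to be inferred rather than read off the image.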

Neural networks learn by memorizing particular input-output relations, which helps them map out a space of possibilities, and then forgetting the memorized details as training examples accumulate. Enforcing differential-privacy constraints usually results in some performance drop, but letting a network memorize a little bit of public data, then training it on private data, enables it to meet differential-privacy criteria while cutting the resulting error increase by 60%-70%.

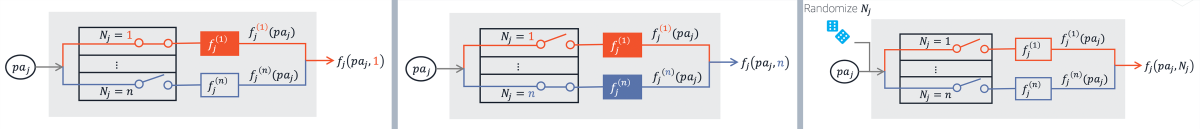

Amazon researchers introduce a definition of “root cause” that uses graphical causal models to formalize the quantitative causal contributions of root causes to observed outliers. To attribute an outlier to a variable, they ask the counterfactual question “Would the event not have been an outlier had the causal mechanism of that variable been normal?” They treat each unobserved noise variable as a random switch that selects a deterministic function from a set of functions, enabling them to replace a deterministic mechanism in the graph with normal mechanisms.
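The counterfactual question can be illustrated with a toy example. The sketch below is not the researchers' method, which quantifies each variable's contribution; it only shows the idea of swapping one variable's observed noise for "normal" noise and checking whether the outlier persists. The two-variable model, the threshold, and all names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
THRESHOLD = 6.0  # hypothetical cutoff: |Y| above this counts as an outlier

def f_y(x, n_y):
    """Assumed causal mechanism of Y given its parent X and its noise term."""
    return 2.0 * x + n_y

# Observed event. X has no parents, so X equals its own noise term.
x_obs, y_obs = 0.5, 9.0
n_x_obs = x_obs
n_y_obs = y_obs - 2.0 * x_obs  # noise value that reproduces the observation

def still_outlier_rate(resample, n_samples=10_000):
    """Fraction of counterfactual worlds in which Y remains an outlier
    after the named variable's noise is replaced by normal draws."""
    normal = rng.standard_normal(n_samples)
    if resample == "X":
        y_cf = f_y(normal, n_y_obs)   # normal mechanism for X, observed noise for Y
    else:
        y_cf = f_y(n_x_obs, normal)   # observed X, normal mechanism for Y
    return float(np.mean(np.abs(y_cf) > THRESHOLD))

rate_x = still_outlier_rate("X")
rate_y = still_outlier_rate("Y")
```

In this toy setup the outlier almost always disappears once Y's mechanism is made normal, and usually survives when only X's is, which points to Y's own mechanism as the root cause.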

On the left, for the observed pair (xj, paj) of variable Xj and its parents PAj, the deterministic mechanism fj(1) of variable Xj is identified by the noise value (Nj = 1) corresponding to the pair (xj, paj). In the middle, a different value of noise (Nj = n) identifies a counterfactual deterministic mechanism fj(n). On the right, by drawing random samples of the noise term Nj according to some distribution, we assign “normal” deterministic mechanisms to Xj.

Most large language models use decoder-only architectures, which work well for language modeling but are less effective for machine translation and text summarization. The 20-billion-parameter Alexa Teacher Model, which has an encoder-decoder architecture, outperforms language models more than 20 times its size on tasks such as few-shot article summarization and translation to and from low-resource languages.

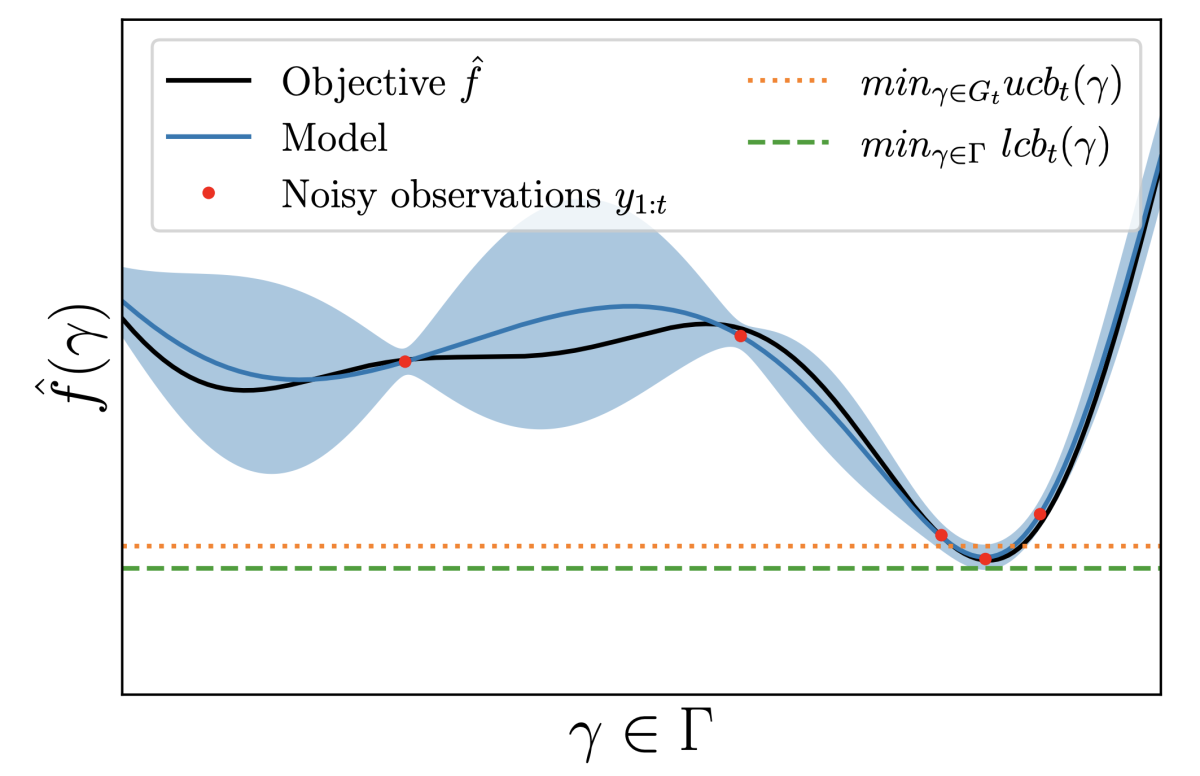

An example of Bayesian optimization, in which γ is a set of hyperparameter configurations and f-hat is an empirical estimate of the resulting model error. The gap between the green and orange lines is the estimate of the upper bound on the optimizer's regret, or the distance between the ideal hyperparameter configuration and the best configuration found by the optimization algorithm.

A machine learning model’s hyperparameter configuration can dramatically affect performance. Hyperparameter optimization (HPO) algorithms search the configuration space as efficiently as possible, but at some point, the search has to be called off. A new stopping criterion offers a better trade-off between the time consumption of HPO and the accuracy of the resulting models. The criterion acknowledges that the generalization error, which is the true but unknown optimization objective, does not coincide with the empirical estimate optimized by HPO. As a consequence, optimizing the empirical estimate too strenuously may in fact be counterproductive.
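A stopping rule in this spirit might look like the sketch below. It assumes the optimizer exposes upper and lower confidence bounds on the error, and it stops once the estimated regret bound falls below an estimate of how noisy the empirical error is. The function names, the way the bound is formed, and the numbers are illustrative assumptions rather than the published criterion.

```python
import numpy as np

def regret_upper_bound(ucb_evaluated, lcb_candidates):
    """Estimated upper bound on the optimizer's regret.

    ucb_evaluated:  upper confidence bounds on the error of configurations tried so far.
    lcb_candidates: lower confidence bounds on the error across candidate configurations.
    """
    # Worst plausible error of the best configuration found so far,
    # minus the best plausible error of any candidate.
    return float(np.min(ucb_evaluated) - np.min(lcb_candidates))

def should_stop(ucb_evaluated, lcb_candidates, estimation_noise):
    """Stop once further search could gain less than the noise in the error estimate."""
    return regret_upper_bound(ucb_evaluated, lcb_candidates) <= estimation_noise

# Hypothetical confidence bounds on validation error, for illustration only.
ucb = [0.30, 0.25, 0.28]
lcb = [0.20, 0.22, 0.24, 0.19]

bound = regret_upper_bound(ucb, lcb)
keep_searching = not should_stop(ucb, lcb, estimation_noise=0.02)
```

Once the possible gain is smaller than the noise in the empirical estimate, further search mostly fits that noise instead of reducing the generalization error.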

From iterated SAT solvers to verifiable code, Amazon’s Byron Cook, Daniel Kröning, and Marijn Heule discuss the prospects for automated reasoning at Amazon.