Code generation — automatically translating natural-language specifications into computer code — is one of the most promising applications of large language models (LLMs). But the more complex the programming task, the more likely the LLM is to make errors.

Of course, the more complex the task, the more likely human coders are to make errors, too. That’s why debugging is an essential component of the software development pipeline. In a paper we presented at the 2024 Conference on Neural Information Processing Systems (NeurIPS), we describe a new way to train LLMs to be better debuggers while simultaneously improving code generation ability.

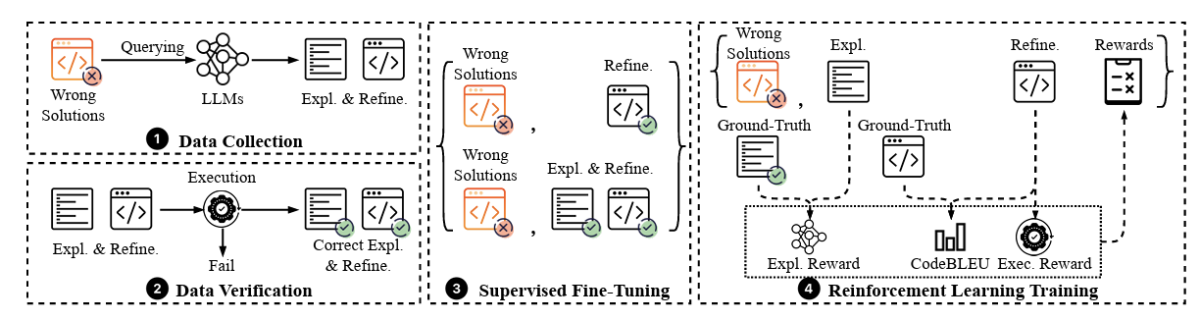

Previous attempts to debug code with LLMs have primarily used few-shot learning, where a few examples of successful debugs are provided, and the LLM infers the rest. In our work, by contrast, we use both supervised fine-tuning (SFT) and reinforcement learning (RL) to specialize an LLM for debugging. Since debugging training data is scarce, we used LLMs to create high-quality synthetic training data.

We conducted a series of experiments in which LLMs were given one attempt to generate code in response to a natural-language prompt and one further attempt to debug that code. Because our models had been fine-tuned on debugging data, their initial generations were more successful than those of an LLM relying solely on prompt engineering. But with both our models and the prompt-engineering baseline, debugging always resulted in better code performance.

To evaluate model performance, we used the pass@k metric, in which a model generates k implementations of a natural-language specification and is counted as successful if at least one of those implementations passes a set of prespecified tests. In experiments with different code LLMs (including StarCoder-15B, CodeLlama-7B, and CodeLlama-13B), our approach improved pass@k scores by up to 39% on standard benchmark datasets such as MBPP.
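
As a rough illustration of the metric, the sketch below computes pass@k with the combinatorial estimator that is common in code generation benchmarks: draw n samples per problem, count the c samples that pass every test, and estimate the probability that a random subset of k samples contains at least one passing sample. Whether our experiments used exactly this estimator is an assumption here, and the function name `pass_at_k` is illustrative.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Estimate pass@k for one problem.

    n: number of sampled implementations
    c: number of those samples that pass all unit tests
    k: number of attempts the metric allows
    """
    if not 0 <= c <= n:
        raise ValueError("c must be between 0 and n")
    if not 1 <= k <= n:
        raise ValueError("k must be between 1 and n")
    # Probability that a random size-k subset contains no passing sample
    # is C(n - c, k) / C(n, k); pass@k is the complement.
    return 1.0 - comb(n - c, k) / comb(n, k)
```

For example, with 20 samples of which 5 pass, `pass_at_k(20, 5, 1)` is the plain success rate of a single attempt, and larger values of k can only raise the score.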

Data synthesis

There are several widely used public datasets for training code generation models, which include natural-language prompts; canonical implementations of the prompts in code; and unit tests, specific sequences of inputs that can be used to test the full functional range of the generated code. But training data for debugging models is comparatively sparse.

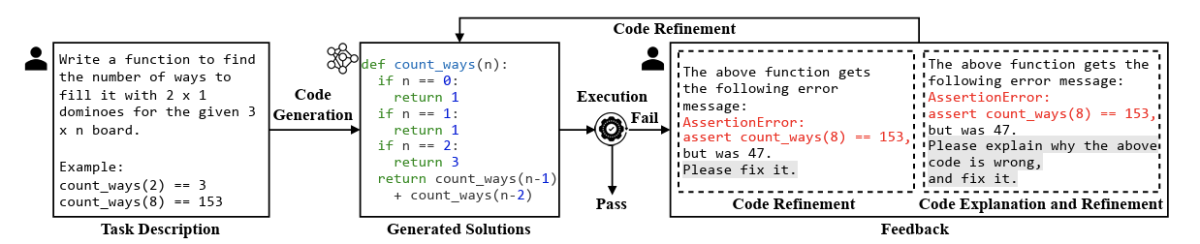

To create our debugging dataset, we begin with several of the existing code generation datasets. We repeatedly feed each natural-language prompt in those datasets to a code generation model, resulting in a number of different generations — say, 20 — for the same prompt. Then we run the relevant unit tests on those generations, keeping only the ones that fail the tests — that is, the buggy code.
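
The filtering step can be sketched as follows. This is a minimal illustration, not our actual pipeline: it assumes the unit tests are Python `assert` statements stored as strings (as in MBPP), and the helper names `run_tests` and `failing_generations` are hypothetical. Running model-generated code with `exec` is unsafe outside a sandbox, and this sketch has no timeout.

```python
from typing import Optional


def run_tests(code: str, unit_tests: list[str]) -> Optional[str]:
    """Run the tests against the code; return an error message, or None on success.

    Assumption: tests are assert statements given as strings.
    Warning: exec on untrusted code needs a sandbox and a timeout in practice.
    """
    namespace: dict = {}
    try:
        exec(code, namespace)  # define the generated function(s)
    except Exception as exc:
        return f"{type(exc).__name__} while loading code: {exc}"
    for test in unit_tests:
        try:
            exec(test, namespace)
        except Exception as exc:
            return f"{type(exc).__name__} on `{test}`: {exc}"
    return None


def failing_generations(candidates: list[str], unit_tests: list[str]) -> list[tuple[str, str]]:
    """Keep only the candidates that fail, paired with their error messages."""
    buggy = []
    for code in candidates:
        error = run_tests(code, unit_tests)
        if error is not None:
            buggy.append((code, error))
    return buggy
```

The error message is kept alongside each failing candidate because the later diagnosis step needs it.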

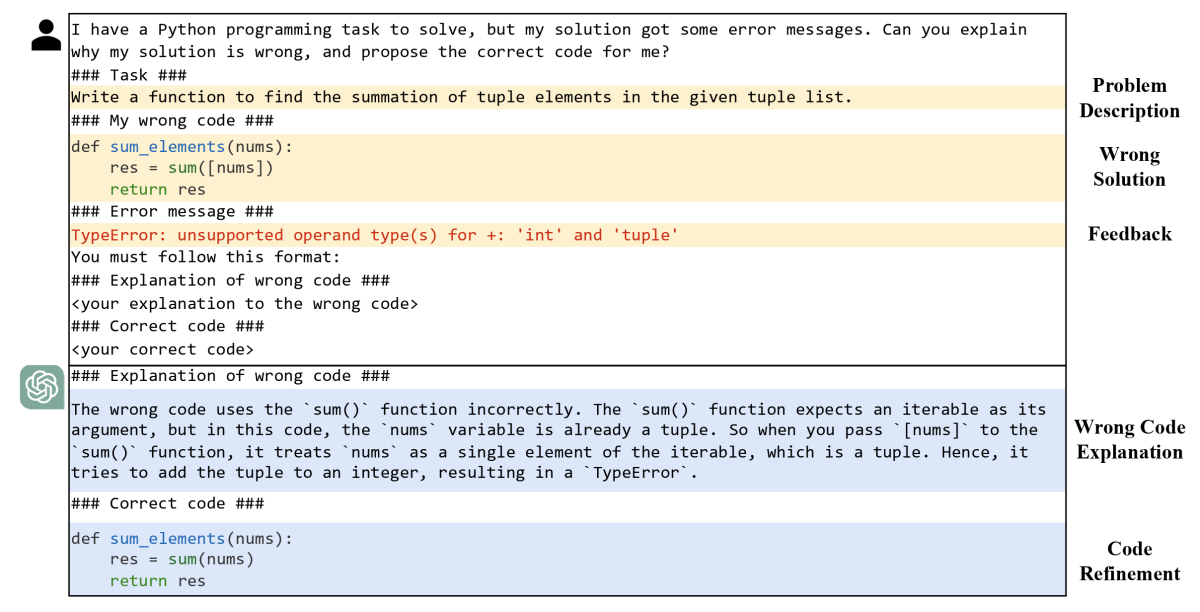

Next, we feed the buggy code to an LLM, together with the error messages it generated on the unit tests, and we prompt the LLM to explain where and why errors occurred. Finally, we take the LLM’s diagnosis and feed it, the buggy code, and the error messages back to the LLM, together with instructions to repair the bug. This is a version of chain-of-thought reasoning: prior work has shown that asking an LLM to explain the action it intends to take before it takes that action often improves performance.

We next execute the unit tests on the revised code, this time keeping only those revisions that pass all the tests. We now have a new dataset consisting of natural-language prompts; buggy implementations of those prompts; diagnoses of the bugs; debugged code; and unit tests.
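
The diagnose-then-repair loop and the final filter might look like the sketch below. Everything named here is an assumption made for illustration: `llm` stands for any function that maps a prompt string to a completion string, the prompt wording is invented, and `DebugExample` is a hypothetical record type holding the five fields listed above plus the error message.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class DebugExample:
    prompt: str
    buggy_code: str
    error_message: str
    diagnosis: str
    fixed_code: str
    unit_tests: list[str]


def passes(code: str, unit_tests: list[str]) -> bool:
    """True if the code loads and every assert-style test succeeds.

    Warning: exec on untrusted code needs a sandbox and a timeout in practice.
    """
    namespace: dict = {}
    try:
        exec(code, namespace)
        for test in unit_tests:
            exec(test, namespace)
    except Exception:
        return False
    return True


def synthesize(prompt: str, buggy_code: str, error_message: str,
               unit_tests: list[str],
               llm: Callable[[str], str]) -> Optional[DebugExample]:
    """Ask for a diagnosis, then a repair; keep the example only if the repair passes."""
    context = f"Task:\n{prompt}\n\nCode:\n{buggy_code}\n\nErrors:\n{error_message}\n\n"
    # Step 1: explanation of where and why the code fails (hypothetical wording).
    diagnosis = llm(context + "Explain where and why the code fails.")
    # Step 2: repair, conditioned on the diagnosis.
    fixed_code = llm(context + f"Diagnosis:\n{diagnosis}\n\nRewrite the code to fix the bug.")
    if not passes(fixed_code, unit_tests):
        return None  # discard revisions that still fail
    return DebugExample(prompt, buggy_code, error_message, diagnosis, fixed_code, unit_tests)
```

Returning `None` for a failed repair mirrors the filtering described above: only revisions that pass every test enter the dataset.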

Model updates

Armed with this dataset, we’re ready to update our debugging model, using both SFT and RL. With both update methods, we experimented with training regimens in which we asked for chain-of-thought explanations before asking for code revisions and those in which we simply asked for revisions.

With SFT, we prompted the model with the natural-language instructions, the buggy code, and the error messages from the unit tests. Model outputs were evaluated according to their performance on the unit tests.
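
To make the two training regimens concrete, here is a hedged sketch of how one dataset record could be turned into an SFT input and target, with or without a chain-of-thought explanation. The function name `build_sft_pair`, the section labels, and the instruction wording are all assumptions for illustration, not the templates we used.

```python
from typing import Optional


def build_sft_pair(task: str, buggy_code: str, error_message: str,
                   fixed_code: str, diagnosis: Optional[str] = None) -> tuple[str, str]:
    """Return (model_input, training_target) for one debugging example.

    With a diagnosis, the target puts the explanation before the corrected
    code (chain-of-thought regimen); without one, the target is the code alone.
    """
    model_input = (
        f"Task:\n{task}\n\n"
        f"Buggy code:\n{buggy_code}\n\n"
        f"Test feedback:\n{error_message}\n\n"
    )
    if diagnosis is None:
        model_input += "Provide the corrected code."
        target = fixed_code
    else:
        model_input += "Explain the bug, then provide the corrected code."
        target = f"Explanation:\n{diagnosis}\n\nCorrected code:\n{fixed_code}"
    return model_input, target
```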

With RL, the model interacts iteratively with the training data, attempting to learn a policy that will maximize a reward function. Classic RL algorithms require a continuous reward function, to enable exploration of the optimization landscape.

The unit test feedback is binary, hence discrete. To overcome this limitation, in addition to success rate on the unit tests, our RL reward function also includes the revised code’s CodeBLEU score, which measures its similarity to the code of the canonical examples, providing a continuous reward signal.
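
A reward of this shape can be sketched as below. Two loud assumptions: the additive combination and the `weight` parameter are illustrative, since this article does not spell out how the two terms are combined, and `difflib.SequenceMatcher` is used only as a simple stand-in for CodeBLEU, which additionally compares syntax trees and data flow.

```python
from difflib import SequenceMatcher


def similarity(candidate: str, reference: str) -> float:
    """Stand-in for CodeBLEU (an assumption): character-level similarity in [0, 1]."""
    return SequenceMatcher(None, candidate, reference).ratio()


def reward(tests_passed: int, tests_total: int,
           candidate: str, reference: str, weight: float = 0.5) -> float:
    """Combine discrete test feedback with a continuous similarity term.

    The additive form and the default weight are illustrative assumptions.
    """
    if tests_total <= 0:
        raise ValueError("tests_total must be positive")
    pass_rate = tests_passed / tests_total
    return pass_rate + weight * similarity(candidate, reference)
```

The point of the second term is that two revisions that both fail the tests can still receive different rewards, so the policy gets a gradient toward the canonical solution even when no test passes.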

Unit tests are time- and resource-intensive to apply, so training on CodeBLEU scores also opens the possibility of training directly on the canonical examples, a much more computationally efficient process. Our experiments indicate that this approach does improve debugging performance, though not as much as training on unit test results as well.

Evaluation

In our experiments, we used three types of models: one was a vanilla LLM that relied entirely on prompt engineering; one was an LLM updated on our dataset using SFT only; and one was an LLM updated on our dataset using both SFT and RL.

We implemented each type of model using three different LLM architectures, and for each class of model, we measured three sets of outputs: an initial generation; a direct revision of the initial generation; and a revision involving chain-of-thought reasoning. Finally, we also investigated two different generation paradigms: in one, a model was given one chance to generate correct code; in the other, it was given 10 chances. This gave us a total of 24 different comparisons.

Across the board, our updated models outperformed the prompt-engineering baselines. In all but one case, the version of our model updated through both SFT and RL outperformed the version updated through SFT only. Overall, we demonstrate a scalable way to use execution feedback and canonical examples to better debug code models and improve their generation performance.