Many of today’s most popular AI systems are, at their core, classifiers. They classify inputs into different categories: this image is a picture of a dog, not a cat; this audio signal is an instance of the word “Boston”, not the word “Seattle”; this sentence is a request to play a video, not a song.

But what happens if you need to add a new class to your classifier — if, say, someone releases a new type of automated household appliance that your smart-home system needs to be able to control?

The traditional approach to updating a classifier is to acquire a lot of training data for the new class, add it to all the data used to train the classifier initially, and train a new classifier on the combined data set. With today’s commercial AI systems, many of which were trained on millions of examples, this is a laborious process.

This week, at the 33rd conference of the Association for the Advancement of Artificial Intelligence (AAAI), my colleague Lingzhen Chen from the University of Trento and I are presenting a paper on techniques for updating a classifier using only training data for the new class.

As an example application, we consider a neural network that has been trained to identify people and organizations in online news articles. We show that it is possible to transfer that network and its learned parameters into a new network trained to identify an additional type of named entity — locations.

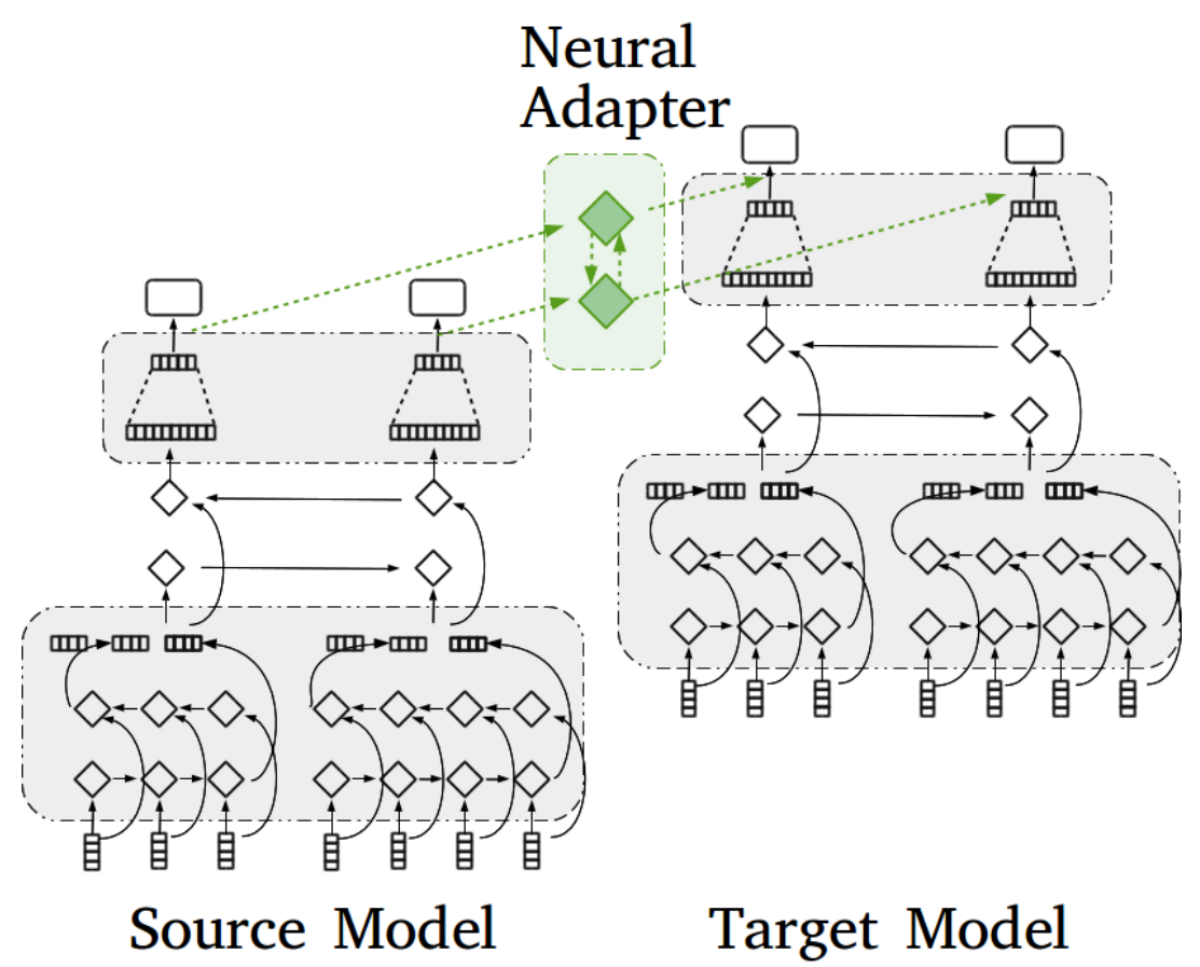

In particular, we found that the most effective technique is to keep the original classifier; pass its output through a separate network, which we call a neural adapter; and use the output of the neural adapter as an additional input to a second, parallel classifier, which is trained on just the data for the new class. The adapter and the new classifier are trained together, and at run time, the same input passes to both classifiers.
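The data flow described above can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions, not the paper's implementation: every weight is a made-up placeholder, each network is reduced to one or two dense layers, and the adapter is a single dense layer with a tanh nonlinearity. The function names (`old_classifier`, `neural_adapter`, `new_classifier`) are hypothetical.

```python
import math

def dense(inputs, weights, biases):
    """Weighted sum of the inputs for each output node, plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def tanh(values):
    return [math.tanh(v) for v in values]

def zeros(n):
    return [0.0] * n

# All weights below are made-up placeholders, not trained values.
OLD_W = [[0.4, -0.1], [-0.3, 0.6], [0.1, 0.1]]    # 2 features -> 3 original classes
ADAPTER_W = [[0.5, -0.5, 0.0], [0.0, 0.5, -0.5]]  # 3 old scores -> 2 adapter units
NEW_HIDDEN_W = [[0.2, 0.7], [-0.6, 0.3]]          # 2 features -> 2 hidden units
NEW_OUT_W = [[0.3, -0.2, 0.5, 0.1],               # 2 hidden + 2 adapter inputs
             [-0.1, 0.4, 0.2, -0.3],              #   -> 4 classes (3 original + 1 new)
             [0.6, 0.1, -0.4, 0.2],
             [0.0, -0.5, 0.1, 0.4]]

def old_classifier(features):
    """The original classifier: scores for the original classes only."""
    return dense(features, OLD_W, zeros(3))

def neural_adapter(old_scores):
    """Transforms the original classifier's output for use by the new one."""
    return tanh(dense(old_scores, ADAPTER_W, zeros(2)))

def new_classifier(features, adapter_out):
    """Parallel classifier: its own hidden layer plus the adapter output."""
    hidden = tanh(dense(features, NEW_HIDDEN_W, zeros(2)))
    return dense(hidden + adapter_out, NEW_OUT_W, zeros(4))

def predict(features):
    # The same input passes to both classifiers.
    adapter_out = neural_adapter(old_classifier(features))
    return new_classifier(features, adapter_out)

scores = predict([1.0, -0.5])
```

Here `scores` holds one value per class in the expanded label set. In training, the adapter and new-classifier weights would be learned together from the new-class data.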

The problem of adapting an existing network to new classes of data is an interesting one in general, but it’s particularly important to Alexa. Alexa scientists and engineers have poured a great deal of effort into Alexa’s core functionality, but through the Alexa Skills Kit, we’ve also enabled third-party developers to build their own Alexa skills — 70,000 and counting. The type of adaptation — or “transfer learning” — that we study in the new paper would make it possible for third-party developers to make direct use of our in-house systems without requiring access to in-house training data.

Modeled loosely on the human neural system, neural nets are networks of simple but densely interconnected processing nodes. Typically, those nodes are arranged into layers, and the output of each layer passes to the layer above it. The connections between layers have associated “weights” that determine how much the output of one node contributes to the computation performed by the next, and training is a matter of adjusting those weights. Input data is fed into the bottom layer, and the output of the top layer indicates the likelihood that the input fits into any of the available classes.
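To make the layered picture concrete, here is a minimal sketch of a forward pass through a tiny two-layer network. The layer sizes, the weights, the ReLU nonlinearity, and the softmax output are all illustrative assumptions rather than details of the networks in the paper.

```python
import math

def dense(inputs, weights, biases):
    """One layer: each output node is a weighted sum of all input nodes plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    return [max(0.0, v) for v in values]

def softmax(scores):
    """Turn raw scores into likelihoods that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical weights for a network with 3 inputs, 2 hidden nodes, and 3 classes.
HIDDEN_W = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
HIDDEN_B = [0.0, 0.1]
OUTPUT_W = [[1.0, -1.0], [-0.5, 0.5], [0.2, 0.2]]
OUTPUT_B = [0.0, 0.0, 0.0]

def classify(features):
    # Input enters the bottom layer; each layer's output feeds the layer above.
    hidden = relu(dense(features, HIDDEN_W, HIDDEN_B))
    return softmax(dense(hidden, OUTPUT_W, OUTPUT_B))

probs = classify([1.0, 0.5, -1.0])
```

Training would adjust the entries of `HIDDEN_W`, `HIDDEN_B`, `OUTPUT_W`, and `OUTPUT_B`. The forward pass itself stays the same.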

For our initial (pre-adaptation) classifiers, we evaluated two different network architectures. One includes a layer known as a conditional random field just under the output layer, and the other does not. Before adaptation, the network with the conditional-random-field (CRF) layer slightly outperforms the one without (91.35% accuracy versus 91.06%).

The first transfer-learning method we examine is simply to expand the trained network’s output layer and the layers immediately beneath it to accommodate the new class, and then to retrain the network on just the new data. We compare this approach with the one that uses the neural adapter, whose output joins the data flow of the new network just below the final layer (the CRF layer in one case, the final classification layer in the other).
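As a rough sketch of the first method, widening an output layer amounts to appending rows to its weight matrix while leaving the trained rows intact. The helper name `expand_output_layer`, the initialization range, and the example weights are assumptions made for illustration. The sketch widens only the output layer, not the layers beneath it.

```python
import random

def expand_output_layer(weights, biases, n_new_classes, seed=0):
    """Return copies of an output layer's parameters with extra rows appended.

    Rows for the original classes keep their trained values; rows for the new
    classes start from small random values and are learned during retraining.
    """
    rng = random.Random(seed)
    n_inputs = len(weights[0])
    new_rows = [[rng.uniform(-0.1, 0.1) for _ in range(n_inputs)]
                for _ in range(n_new_classes)]
    expanded_weights = [row[:] for row in weights] + new_rows
    expanded_biases = list(biases) + [0.0] * n_new_classes
    return expanded_weights, expanded_biases

# Hypothetical trained output layer: 2 inputs -> 3 original classes.
trained_w = [[0.4, -0.1], [-0.3, 0.6], [0.1, 0.1]]
trained_b = [0.05, -0.02, 0.0]

# Make room for one new class, such as locations.
new_w, new_b = expand_output_layer(trained_w, trained_b, n_new_classes=1)
```

The expanded layer now produces one more score than before, and retraining on the new data fills in the appended row.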

For both initial architectures and both transfer-learning methods, we compared two retraining regimes: one in which only the weights of the top few layers were allowed to vary, and one in which the weights of the entire network were allowed to vary. Across the board, allowing all the weights to vary offered the best performance.
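The difference between the two retraining regimes comes down to which weights an update step is allowed to touch. The sketch below assumes plain gradient descent and uses hypothetical layer names, weights, and gradients. It is not the optimizer or the network from the paper.

```python
def sgd_step(params, grads, learning_rate, trainable):
    """Apply one gradient-descent update, leaving frozen layers unchanged."""
    updated = {}
    for name, weights in params.items():
        if name in trainable:
            updated[name] = [w - learning_rate * g
                             for w, g in zip(weights, grads[name])]
        else:
            updated[name] = list(weights)  # frozen: copy through as-is
    return updated

# Hypothetical per-layer weights and gradients, flattened to short lists.
params = {"embedding": [0.5, -0.5], "hidden": [0.2, 0.1], "output": [0.3, -0.4]}
grads = {"embedding": [1.0, 1.0], "hidden": [1.0, -1.0], "output": [-1.0, 1.0]}

# Regime 1: only the top layer varies.
top_only = sgd_step(params, grads, 0.1, trainable={"output"})

# Regime 2: every layer varies.
all_layers = sgd_step(params, grads, 0.1, trainable=set(params))
```

In the first call, the `embedding` and `hidden` weights come back unchanged. In the second, every layer moves.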

The best-performing post-adaptation network was the one that used both the CRF layer and the neural adapter. With that architecture, the performance of the adapted network on only the original data fell off slightly, from 91.35% to 91.08%, but that was still the best figure across all architectures and adaptation methods. Performance on the new data was almost as good, at 90.73% accuracy.

Acknowledgments: Lingzhen Chen