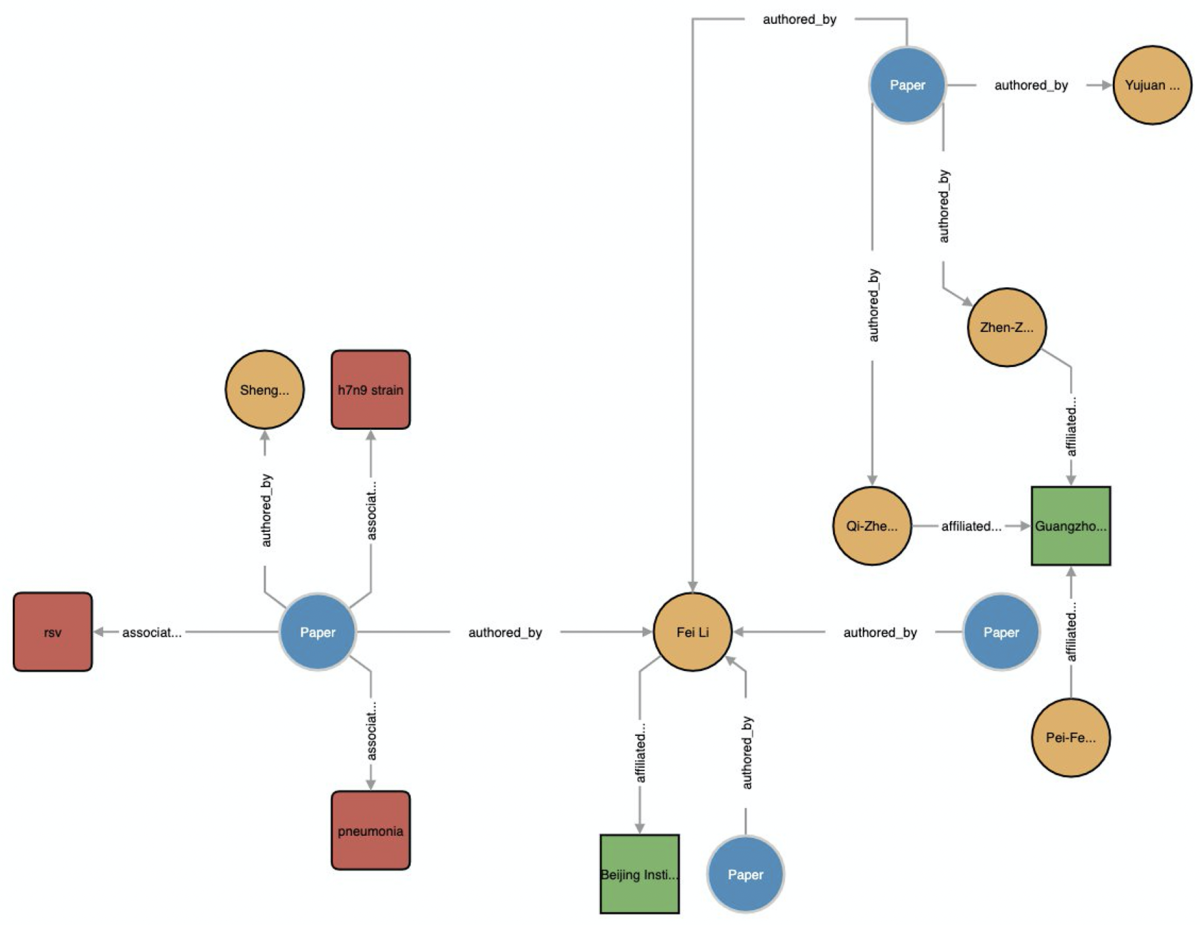

Knowledge graphs are a way of organizing information so that it can be more productively explored and analyzed. Like all graphs, they consist of nodes (usually depicted as circles) and edges (usually depicted as line segments connecting nodes). In a knowledge graph, the nodes typically represent entities, and the edges indicate relationships between them.

In May, Amazon Web Services (AWS) publicly released the COVID-19 Knowledge Graph (CKG), which organizes the information in the COVID-19 Open Research Dataset (CORD-19), a growing repository of academic publications about COVID-19 and related topics created by a consortium led by the Allen Institute for AI. CKG powers AWS’s CORD-19 ranking and recommendation system.

In a paper we presented earlier this month at the AACL-IJCNLP Workshop on Integrating Structured Knowledge and Neural Networks for NLP, we explain how we created CKG, and we describe several possible applications, including the ranking of papers on particular topics and the discovery of related papers.

How is the graph structured?

The graph has five types of nodes: paper nodes, containing metadata about the papers, such as titles and ID numbers; author nodes, containing authors’ names; institution nodes, containing institutions’ names and locations; concept nodes, containing specific medical terms that appear in papers, such as ibuprofen, heart dysfunction, and asthma; and topic nodes, containing general areas of study such as genomics, epidemiology, and virology.

The graph also has five types of edges: authored_by, linking a paper to its authors; affiliated_with, linking authors to their institutions; associated_concept, linking a paper to its associated concepts; associated_topic, linking a paper to its topics; and cites, linking a paper to other papers that cite it.
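To make the schema concrete, here is a minimal in-memory sketch of the node and edge types listed above. It is not how CKG is stored; the `TinyGraph` class, the node IDs, and the sample titles and names are all hypothetical and exist only for illustration.

```python
from collections import defaultdict

# Node types and edge types (with their endpoint types) as described in the text.
NODE_TYPES = {"paper", "author", "institution", "concept", "topic"}
EDGE_TYPES = {
    "authored_by": ("paper", "author"),
    "affiliated_with": ("author", "institution"),
    "associated_concept": ("paper", "concept"),
    "associated_topic": ("paper", "topic"),
    "cites": ("paper", "paper"),
}


class TinyGraph:
    """Hypothetical toy container that enforces the schema above."""

    def __init__(self):
        self.nodes = {}                 # node_id -> (node_type, properties)
        self.edges = defaultdict(list)  # (source_id, edge_type) -> [target_id]

    def add_node(self, node_id, node_type, **props):
        if node_type not in NODE_TYPES:
            raise ValueError(f"unknown node type: {node_type}")
        self.nodes[node_id] = (node_type, props)

    def add_edge(self, src, edge_type, dst):
        src_type, dst_type = EDGE_TYPES[edge_type]
        if self.nodes[src][0] != src_type or self.nodes[dst][0] != dst_type:
            raise ValueError(f"{edge_type} must link {src_type} -> {dst_type}")
        self.edges[(src, edge_type)].append(dst)

    def neighbors(self, src, edge_type):
        return list(self.edges.get((src, edge_type), []))


# Made-up sample data.
g = TinyGraph()
g.add_node("p1", "paper", title="Hypothetical paper A")
g.add_node("a1", "author", name="Hypothetical Author")
g.add_node("i1", "institution", name="Hypothetical University")
g.add_node("t1", "topic", name="virology")
g.add_edge("p1", "authored_by", "a1")
g.add_edge("a1", "affiliated_with", "i1")
g.add_edge("p1", "associated_topic", "t1")
```

Encoding the endpoint types next to each edge name lets the toy graph reject malformed links, such as an authored_by edge that starts at an author.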

How was the graph created?

The standardized format of the papers in the CORD-19 database allows for easy extraction of title, abstract, body, authors and institutions, and citations.

To identify concepts, we rely on Amazon Comprehend Medical, which extracts medical entities from the text and also classifies them into entity types. For instance, given the sentence “Abdominal ultrasound noted acute appendicitis”, Amazon Comprehend Medical would extract the entities abdominal (anatomy), ultrasound (test treatment procedure), and acute appendicitis (medical condition).

To extract topics, we use an extension of latent Dirichlet allocation called Z-LDA, which is trained using the title, abstract, and body text from each paper. Z-LDA assumes that the terms most characteristic of a paper reflect some topic, and it selects one of those terms as the label for the topic based on frequency of occurrence across the corpus. A list of topics generated in this manner was whittled down to a final 10 topics with the help of medical professionals.

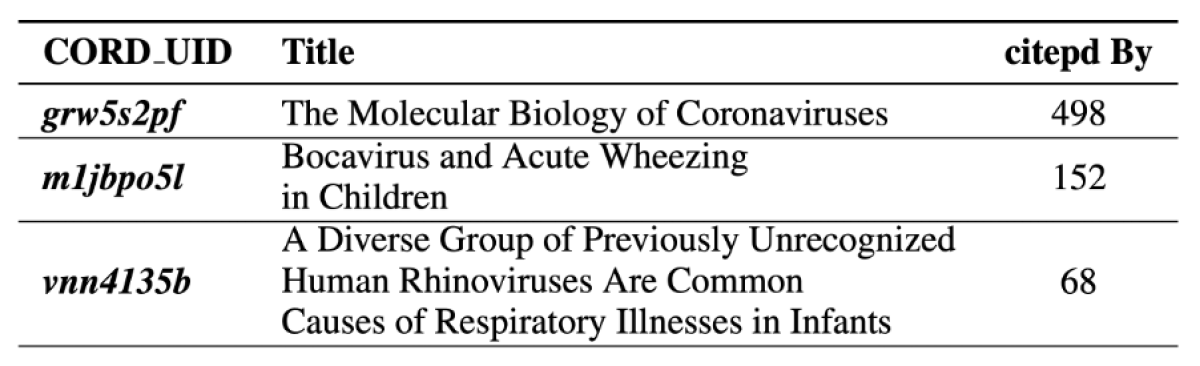

Example application: citation-based ranking

In academia, a standard measure of a paper’s relevance is the number of publications that cite it. A graph structure makes it easy to count citations. But it also enables customized counts, such as citations by publications that deal with a specific topic or include specific concepts.
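As a rough illustration of a customized count, the sketch below tallies citations overall and then only those coming from papers on a given topic. The paper IDs, the citation pairs, the topic assignments, and the `citation_counts` helper are invented for the example; in practice the same question would be posed as a query against the graph.

```python
from collections import Counter

# Hypothetical toy data: (citing_paper, cited_paper) pairs and paper -> topics.
citations = [("p2", "p1"), ("p3", "p1"), ("p4", "p1"), ("p3", "p2"), ("p4", "p2")]
topics = {
    "p1": {"virology"},
    "p2": {"virology"},
    "p3": {"epidemiology"},
    "p4": {"virology", "genomics"},
}


def citation_counts(citations, topics, citing_topic=None):
    """Count citations per cited paper, optionally keeping only
    citations that come from papers tagged with `citing_topic`."""
    counts = Counter()
    for citing, cited in citations:
        if citing_topic is None or citing_topic in topics.get(citing, set()):
            counts[cited] += 1
    return counts


all_counts = citation_counts(citations, topics)
virology_counts = citation_counts(citations, topics, citing_topic="virology")

# Papers ranked by how often virology papers cite them.
ranking = [paper for paper, _ in virology_counts.most_common()]
```

The same filter could be keyed on concepts instead of topics by swapping the lookup table.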

Similar-paper engine

Given a paper, the similar-paper engine retrieves a list of k similar papers. It uses two different measures of similarity, which are combined in a final step.

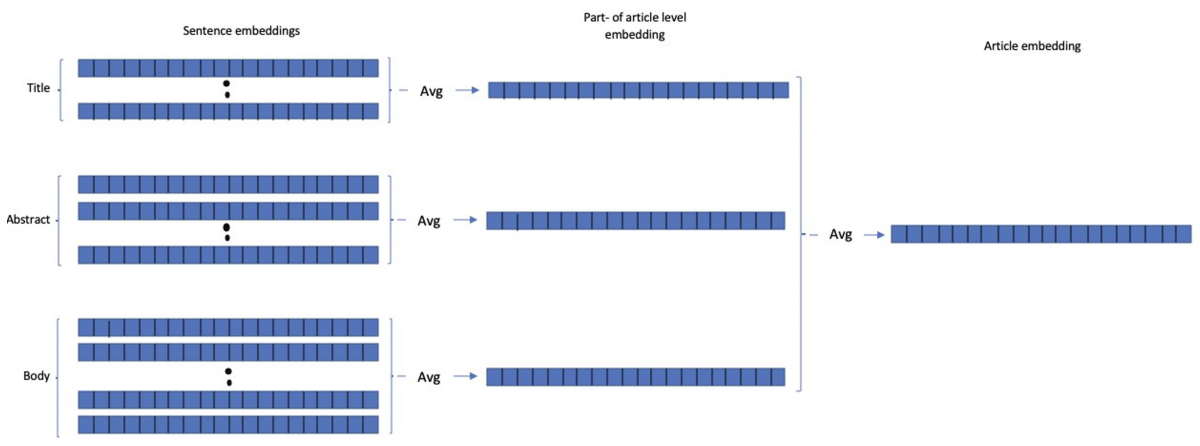

One measure uses SciBERT embeddings, which are based on the popular BERT language model but trained specifically on scientific texts. SciBERT represents input sentences as points in a multidimensional space, such that sentences relating to the same scientific concepts tend to cluster together.

We create separate embeddings for the title, abstract, and body of a paper and then average them to create a final embedding. Previous research suggests that title embeddings may be easier to distinguish from one another than body embeddings, while body embeddings carry richer information. So we chose an embedding scheme that gave both equal weight. The proximity in the representational space of the averaged embeddings indicates the similarity of the associated papers.
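The averaging step can be sketched in a few lines. The three-dimensional vectors below are hand-written stand-ins, not real SciBERT outputs (which have hundreds of dimensions), and `average_embeddings` is a hypothetical helper name.

```python
def average_embeddings(vectors):
    """Element-wise mean of equal-length vectors, so each input
    (title, abstract, body) contributes with equal weight."""
    if not vectors:
        raise ValueError("need at least one vector")
    dim = len(vectors[0])
    if any(len(v) != dim for v in vectors):
        raise ValueError("all vectors must have the same dimension")
    return [sum(values) / len(vectors) for values in zip(*vectors)]


# Made-up stand-ins for the title, abstract, and body embeddings of one paper.
title_vec = [1.0, 0.0, 2.0]
abstract_vec = [0.0, 3.0, 2.0]
body_vec = [2.0, 3.0, 2.0]

paper_vec = average_embeddings([title_vec, abstract_vec, body_vec])
```

Because the mean is unweighted, a long body section has no more influence on the final embedding than a short title.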

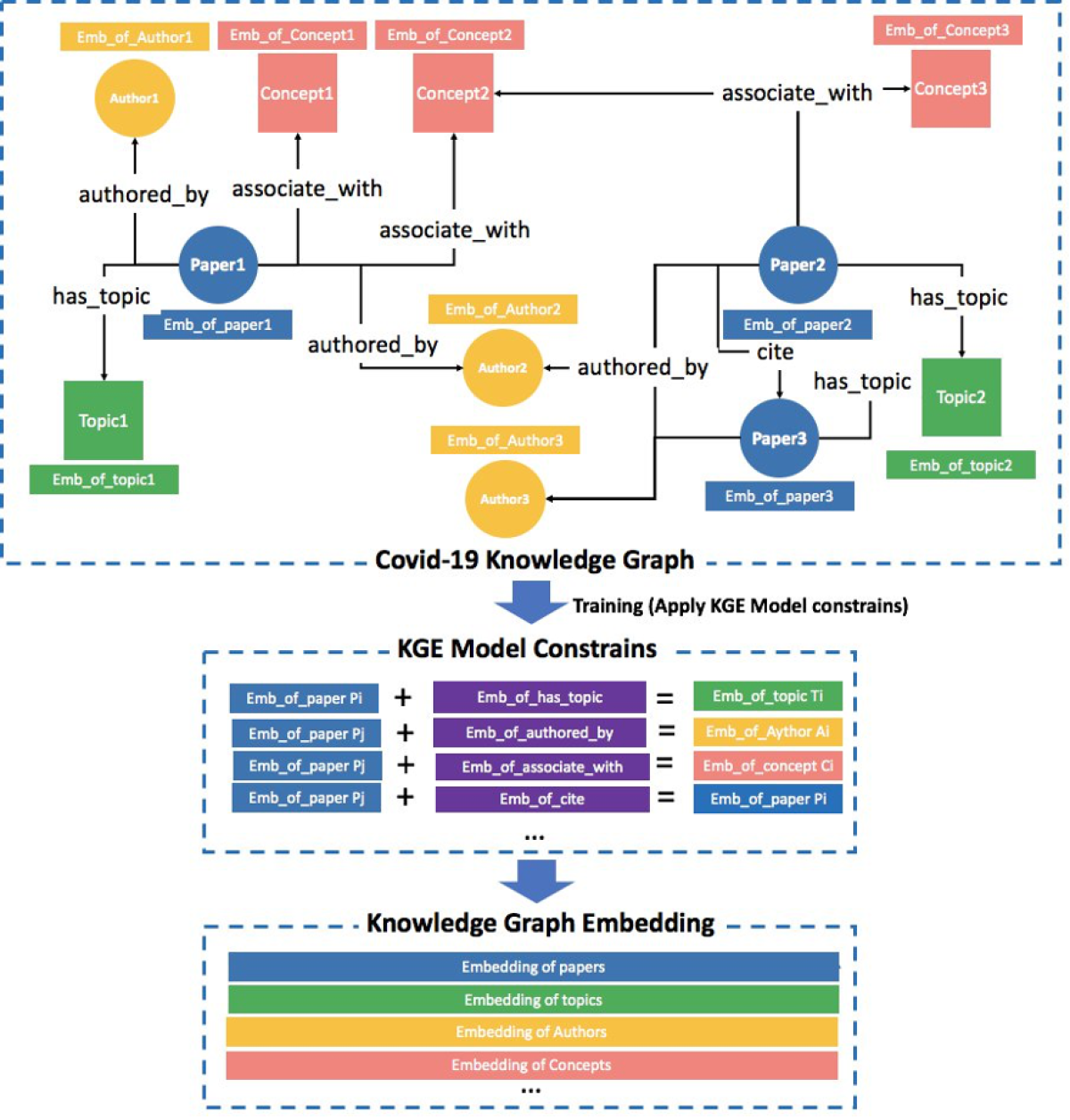

The second measure uses a different kind of embedding, knowledge graph embeddings, which attempt to preserve relationships encoded in a knowledge graph. If two entities are connected in the graph by an edge representing a relationship, then the embedding of the first entity, when added to a vector representing the relationship, should produce a point in the vicinity (ideally, at the exact location) of the second entity.
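The "head plus relation lands near tail" idea is the one behind translation-based embedding models such as TransE. The sketch below scores a triplet by the Euclidean distance between head + relation and tail; the two-dimensional vectors are made up, and the text does not state which exact scoring function was used for CKG, so treat this as an illustration of the principle only.

```python
import math


def translation_distance(head, relation, tail):
    """Euclidean distance between (head + relation) and tail.
    A small value means the triplet looks plausible."""
    return math.sqrt(sum((h + r - t) ** 2 for h, r, t in zip(head, relation, tail)))


# Made-up 2-D embeddings for illustration.
paper = [1.0, 2.0]
authored_by = [0.5, -1.0]
true_author = [1.5, 1.0]    # exactly paper + authored_by
other_author = [4.5, 5.0]   # far from paper + authored_by

good = translation_distance(paper, authored_by, true_author)
bad = translation_distance(paper, authored_by, other_author)
```

Training adjusts the entity and relation vectors so that real edges get small distances and fabricated ones get large distances.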

To create our knowledge-graph-embedding network, we use the tool DGL-KE, which was developed at AWS and extends our earlier Deep Graph Library (DGL).

As training data, we extract triplets (h, r, t) from CKG, where h is the head entity, r is the type of relation, and t is the tail entity. These triplets are the positive training examples. The negative examples are synthetically created by randomly replacing the head or tail of existing triplets.
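A minimal sketch of this kind of negative sampling follows. The entity IDs, the triplets, and the `corrupt_triplets` helper are hypothetical, and the sampler is deliberately naive: it draws replacements uniformly and ignores node types, which a production sampler might not do.

```python
import random


def corrupt_triplets(positives, entities, rng, max_tries=100):
    """For each positive (head, relation, tail), build one negative by
    replacing either the head or the tail with a random entity.
    Candidates that happen to be real triplets are rejected."""
    positive_set = set(positives)
    negatives = []
    for head, relation, tail in positives:
        for _ in range(max_tries):
            replacement = rng.choice(entities)
            if rng.random() < 0.5:
                candidate = (replacement, relation, tail)   # corrupt the head
            else:
                candidate = (head, relation, replacement)   # corrupt the tail
            if candidate not in positive_set:
                negatives.append(candidate)
                break
        else:
            raise RuntimeError("could not find a negative example")
    return negatives


# Made-up toy graph.
entities = ["p1", "p2", "a1", "a2", "t1"]
positives = [
    ("p1", "authored_by", "a1"),
    ("p2", "authored_by", "a2"),
    ("p1", "associated_topic", "t1"),
    ("p2", "cites", "p1"),
]

negatives = corrupt_triplets(positives, entities, random.Random(0))
```

Each negative keeps the relation and one endpoint of its source triplet, so the model has to learn which endpoint pairings are real rather than which relations exist.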

Using these examples, we train our model to differentiate false links from real links. The result is an embedding for every node in the graph.

At the end of this process, we concatenate the semantic embeddings and knowledge graph embeddings, creating a new, higher-dimensional representational space. By computing the top-k closest vectors (cosine distance) in this space, we obtain the top-k most-similar papers.
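The final step can be sketched as follows, assuming every paper already has a semantic embedding and a knowledge graph embedding. All vectors here are made up, and `cosine_similarity` and `top_k_similar` are hypothetical helper names. Ranking by highest cosine similarity is equivalent to ranking by lowest cosine distance, since the distance is one minus the similarity.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two nonzero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def top_k_similar(query_id, embeddings, k):
    """IDs of the k papers closest to `query_id`, excluding the query itself."""
    query = embeddings[query_id]
    scored = [
        (cosine_similarity(query, vec), paper_id)
        for paper_id, vec in embeddings.items()
        if paper_id != query_id
    ]
    scored.sort(reverse=True)
    return [paper_id for _, paper_id in scored[:k]]


# Made-up 2-D embeddings from the two models.
semantic = {"p1": [1.0, 0.0], "p2": [0.9, 0.1], "p3": [0.0, 1.0]}
graph = {"p1": [0.5, 0.5], "p2": [0.4, 0.6], "p3": [-0.5, 0.5]}

# Concatenation: list addition joins the two vectors into one 4-D vector.
combined = {paper_id: semantic[paper_id] + graph[paper_id] for paper_id in semantic}

most_similar = top_k_similar("p1", combined, k=2)
```

For a corpus the size of CORD-19, an exhaustive scan like this would usually be replaced by a vectorized or approximate nearest-neighbor search, but the ranking criterion stays the same.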

Given the lack of ground truth for paper recommendations, we evaluate the algorithm using both quantitative and qualitative analyses. These include but are not limited to popularity analysis, topic intersection between the source paper and its recommendations, low-dimensional clustering, and abstract comparison.

Additional information about our approach can be found in a pair of posts on the AWS blog, "Exploring scientific research on COVID-19 with Amazon Neptune, Amazon Comprehend Medical, and the Tom Sawyer Graph Database Browser" and "Building and querying the AWS COVID-19 knowledge graph".

Acknowledgements: Xiang Song, Colby Wise, Vassilis N. Ioannidis, George Price, Ninad Kulkarni, Ryan Brand, Parminder Bhatia, George Karypis