Most machine learning models use supervised learning, meaning they’re trained on annotated data, which is costly and time consuming to acquire.

The chief method for doing unsupervised learning, which doesn’t require annotated data, is clustering, or grouping data points together by salient characteristics. The idea is that each cluster represents some category, such as photos of the same person or the same species of animal.

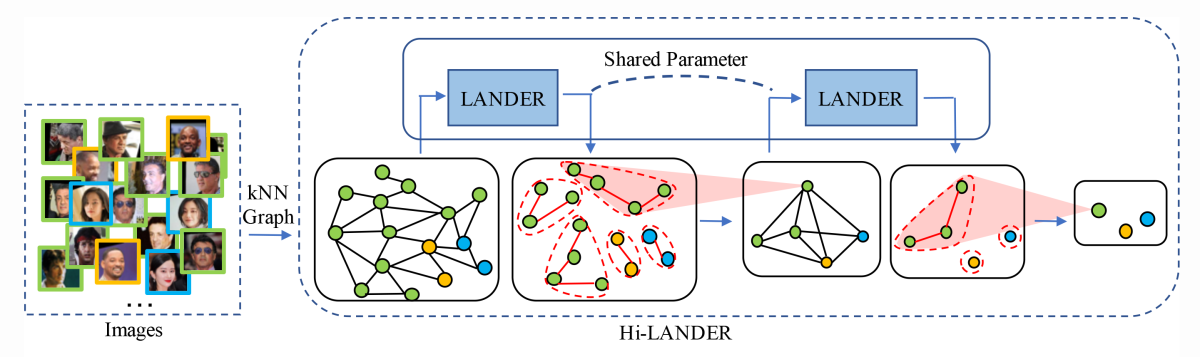

To decide where to draw boundaries between clusters, clustering algorithms typically rely on heuristics, such as a threshold distance between cluster centers or the shape of the clusters’ distributions. In a paper we’re presenting at the International Conference on Computer Vision (ICCV), we propose, instead, to learn from data how to draw boundaries.

We first represent visual data using a graph, then use a graph neural network (GNN) to produce vector representations of the graph’s nodes. So far, we follow on previous work.

Instead of relying on heuristics, however, we use labeled data to learn how to cluster the vectors and, crucially, to decide how fine-grained those clusters should be. We call the labeled data meta-training data, since the goal is to learn a general clustering technique, not a specific classification model.

In particular, we propose a hierarchical GNN, meaning that it creates clusters by adding edges between nodes of a graph, then adds edges between the clusters to create still larger clusters, and so on, iterating until it decides that no more edges should be added.

Finally, we apply our hierarchical clustering technique to test sets whose classification categories are disjoint with those of the meta-training data. In our experiments we found that, compared to previous GNN-based supervised and unsupervised approaches, ours increased the F-score — which factors in both false positives and false negatives — by an average of 49% and 47%, respectively.

Constructing the graph

In our paper, we investigate the case in which we are training a model to cluster visual data that is similar to the meta-training data but has no class overlaps with it. For instance, the meta-training data might be faces of movie stars, while the target application is to cluster faces of politicians, athletes, or other public figures.

The first step in our process is to use the meta-training data to build a supervised classifier: if the meta-training data is faces of movie stars, the classifier labels input images with names of movie stars.

The classifier is an encoder-decoder model: the encoder produces a fixed-length vector representation of the input, or feature vector, and the decoder uses that vector to predict a label. Once we’ve trained the classifier, however, we use only the encoder for the rest of the process.

The feature vectors define points in a multidimensional space. On the basis of the vectors’ locations, we construct a graph, in which each node represents an image, and each image’s k nearest neighbors in the feature space are connected to it (share edges with it) in the graph.

This graph will serve as the input to the clustering model, which is also an encoder-decoder model. The encoder is a GNN, which produces a vector representation of each node in the graph, based on that node’s feature vector and those of the nodes it’s connected to. Call this vector the node embedding.

The clustering model

We adopt a hierarchical approach to clustering. Based on the node embeddings, the clustering model predicts edges between nodes. A cluster is defined as a group of nodes each of which shares an edge with at least one other node in the group and none of which shares an edge with any node outside the group.

Note that the goal of the clustering model is not just to reproduce the nearest-neighbor graph but to link nodes that represent data of the same type. The nearest-neighbor linkages are useful for predicting clustering linkages, but they are not identical with them.

After the first pass through the data, we aggregate each cluster into a single, representative “supernode” and repeat the whole process. That is, we create edges between each supernode and its k nearest neighbors, pass the resulting graph through the same GNN, and predict edges based on the supernode embeddings. We repeat this process until the clustering model predicts no edges between nodes.

We train our clustering model on two different objectives. One is to correctly predict links between nodes, where a correct link is one that picks out two representatives of the same data type in the meta-training data (say, two photos of the same actor).

We also train the model to correctly predict the density of a given data type in a given graph neighborhood. That is, for each node, the model should predict the proportion of nearby neighbors of the same data type.

Past research on clustering has shown that factoring in data density improves results. Previously, however, link prediction and data density prediction were handled by separate models. By using a single model to jointly predict both, we significantly increase computational efficiency. We believe that the combination also contributes to our increase in accuracy.

The other novelty of our approach is that, because of our hierarchical processing scheme, we optimize clustering across the entire input graph. Previous approaches would first divide the graph into subgraphs, then perform inference within subgraphs. This prevents natural parallelization, which is runtime efficient, and limits the effectiveness of information flow through the graph. The full graph-wide processing is another reason for our model’s improved efficiency.

In experiments, we considered two different sets of meta-training data. One consisted of closeups of human faces, the other of images of particular animal species. We tested the model trained on human faces on two other datasets, whose data categories had zero or very little overlap with those of the meta-training set — 0% and less than 2%. We tested the model trained on animal species on a dataset of previously unseen species. Across both models and the three test sets, our average improvements over previous GNN-based clustering models and unsupervised clustering methods were 49% and 47%, respectively.

In ongoing work, we are investigating the possibility training a more general clustering model, whose performance at inference time will be more transferrable across different data types — accurately clustering both faces and animal species, for instance.

Acknowledgements: Tianjun Xiao, Yongxin Wang, Yuanjun Xiong, Wei Xia, David Wipf, Zhang Zheng, Stefano Soatto