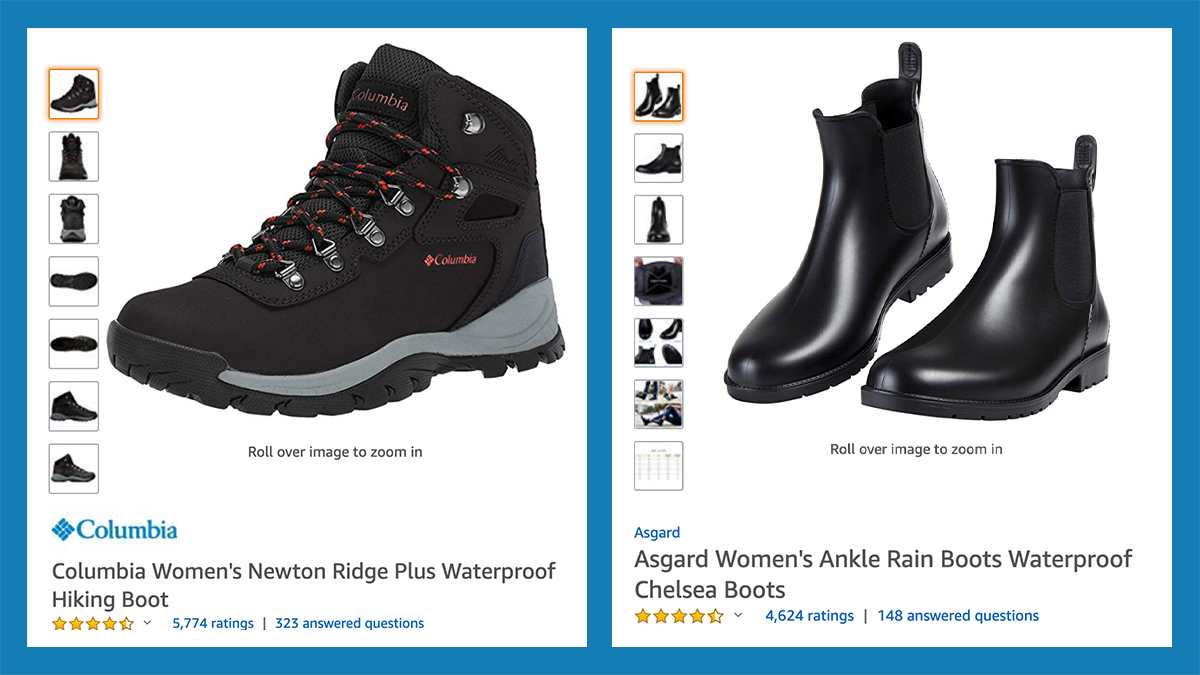

If an Amazon customer enters the query “waterproof shoes”, is she looking for office shoes that will keep her feet dry when she’s walking to the subway, or is she planning a weeklong hike in the Appalachians?

Most product discovery algorithms look for correlations between queries and products, but the best matches for a query could differ significantly depending on the context of use — such as “commuting” versus “hiking”.

In a paper accepted to the ACM SIGIR Conference on Human Information Interaction and Retrieval, my colleagues and I present a new neural-network-based system for predicting context of use from customer queries. From the query “adidas mens pants”, for instance, the system predicts the activity “running”.

In tests, human reviewers agreed, on average, with 81% of the system’s predictions, indicating that the system was identifying patterns that could improve the quality of Amazon’s product discovery algorithms.

The first step in training our system was to assemble a list of context-of-use categories. Human experts identified these categories on the basis of common product queries. We ended up with a list of 173 categories, divided into 112 activities (such as reading, cleaning, and running) and 61 audiences (such as child, daughter, man, and professional).

This was the extent of human involvement in annotating the training data for our system. We automated the rest of the data preparation process.

Building the data set

First, we used standard reference texts to create “aliases” for the terms we used to denote our categories. The aliases for the audience category mother, for instance, included “mum”, “mom”, and “mommy”.

The next stages of the process relied on an in-house Amazon data set, which relates millions of products to particular query strings.

We scoured online reviews of the products for either our original category terms or their aliases. If either — original or alias — turned up in any review of a given product, the product was labeled with the corresponding category term. This was a simple binary classification: either the product was related to the category or it wasn’t. We didn’t weight the classifications according to the frequency with which terms occurred.
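The labeling step described above can be sketched in a few lines of Python. This is only an illustration, not our production code: the alias table is a stand-in (only the aliases for mother come from the example above), the function names are hypothetical, and whole-word, case-insensitive matching is an assumption about how a term "turns up" in a review.

```python
import re

# Hypothetical alias table. Only the "mother" aliases appear in the article;
# the "running" alias is made up for illustration.
ALIASES = {
    "mother": ["mum", "mom", "mommy"],
    "running": ["jogging"],
}

def build_patterns(aliases):
    """Compile one whole-word, case-insensitive pattern per category."""
    patterns = {}
    for category, alts in aliases.items():
        terms = [category] + list(alts)
        joined = "|".join(re.escape(term) for term in terms)
        patterns[category] = re.compile(r"\b(?:" + joined + r")\b", re.IGNORECASE)
    return patterns

def label_product(reviews, patterns):
    """Return every category whose term or alias occurs in at least one review.

    The label is binary: how often a term occurs is deliberately ignored.
    """
    labels = set()
    for category, pattern in patterns.items():
        if any(pattern.search(review) for review in reviews):
            labels.add(category)
    return labels

PATTERNS = build_patterns(ALIASES)
example_reviews = [
    "Bought these for my mom and she loves them.",
    "Great for running in the rain.",
]
example_labels = label_product(example_reviews, PATTERNS)
```

Because the function returns a set, a product mentioned by a hundred reviewers as good for running gets exactly the same label as one mentioned once.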

The internal data set we used correlates query strings with products according to an affinity score, from 1 to 15. A low score means a weak correlation, a high score a strong one. To train our context-of-use predictor, we created a data set in which each entry consists of three items: a query; a product ID, annotated with context-of-use categories; and the query-product affinity score. A single query can be paired with several products, and a single product can be paired with several queries.

We used the resulting data set to train six different machine learning models, each of which used a convolutional neural network. First, we divided our data into two sets, one annotated according to activity, one according to audience. From each of these data sets, we constructed two more, one in which the minimum permissible affinity score was 15, one in which it was 8.
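To make the data set construction concrete, here is a minimal sketch of the entry format and the two splitting steps (by category family, then by affinity threshold). The `Entry` class, the `build_training_set` helper, the product IDs, the affinity scores, and the small category sets are all hypothetical. Keeping entries that end up with no label in the chosen family is also an assumption made for the sketch.

```python
from dataclasses import dataclass
from typing import FrozenSet, List

# Tiny illustrative subsets of the activity and audience categories.
ACTIVITIES = frozenset({"running", "hiking", "reading"})
AUDIENCES = frozenset({"mother", "child", "man"})

@dataclass(frozen=True)
class Entry:
    query: str                  # customer query string
    product_id: str             # product the query is paired with
    categories: FrozenSet[str]  # context-of-use labels on the product
    affinity: int               # query-product affinity score, 1 to 15

def build_training_set(entries: List[Entry], family: FrozenSet[str],
                       min_affinity: int) -> List[Entry]:
    """Keep entries at or above the threshold, with labels from one family only."""
    kept = []
    for entry in entries:
        if entry.affinity < min_affinity:
            continue
        # Entries with no label in this family are kept with an empty label
        # set (an assumption of this sketch).
        kept.append(Entry(entry.query, entry.product_id,
                          entry.categories & family, entry.affinity))
    return kept

entries = [
    Entry("adidas mens pants", "P1", frozenset({"running", "man"}), 15),
    Entry("adidas mens pants", "P2", frozenset({"man"}), 9),
    Entry("waterproof shoes", "P3", frozenset({"hiking"}), 4),
]

activity_strict = build_training_set(entries, ACTIVITIES, min_affinity=15)
activity_loose = build_training_set(entries, ACTIVITIES, min_affinity=8)
audience_loose = build_training_set(entries, AUDIENCES, min_affinity=8)
```

The stricter threshold yields a smaller set of strongly matched pairs, while the looser one admits more, weaker pairs.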

Multiple metrics

The models whose training data had an affinity threshold of 15 were trained using binary cross-entropy, which is commonly used to train binary classifiers and imposes particularly stiff penalties on incorrect classifications that get high confidence scores. With the data that had an affinity threshold of 8, we used two different loss functions. One was the standard binary cross-entropy. The other was B-weighted binary cross-entropy, which is much like ordinary cross-entropy but weights the penalty incurred by each data item according to its affinity score.
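The difference between the two loss functions can be illustrated with a short, dependency-free sketch. The exact weighting scheme is not spelled out here, so the version below assumes that each example's weight is simply its affinity score divided by the maximum score of 15, and that the batch loss is the weighted mean. The function names are hypothetical.

```python
import math

def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Mean binary cross-entropy over the category labels of one example."""
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)  # clip to avoid log(0)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(y_true)

def weighted_binary_cross_entropy(batch, max_affinity=15):
    """Weighted mean of per-example losses.

    `batch` is a list of (y_true, y_pred, affinity) triples. Weighting by
    affinity / max_affinity is an assumption of this sketch.
    """
    weights = [affinity / max_affinity for _, _, affinity in batch]
    losses = [binary_cross_entropy(y, p) for y, p, _ in batch]
    return sum(w * l for w, l in zip(weights, losses)) / sum(weights)

# A confident wrong prediction costs far more than a confident right one.
confident_right = binary_cross_entropy([1], [0.99])
confident_wrong = binary_cross_entropy([1], [0.01])

# Two examples: a well-predicted strong pair and a badly predicted weaker pair.
batch = [
    ([1, 0], [0.9, 0.1], 15),
    ([1, 0], [0.1, 0.9], 8),
]
unweighted = sum(binary_cross_entropy(y, p) for y, p, _ in batch) / len(batch)
weighted = weighted_binary_cross_entropy(batch)
```

In this toy batch the badly predicted example has the lower affinity score, so it contributes less to the weighted loss than to the unweighted one.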

Six models resulted, each of which was trained to predict context of use on the basis of query strings. In tests, the most accurate models used ordinary binary cross-entropy with an affinity threshold of 8. They were able to predict product annotations with an accuracy of 97% for activity categories and 92% for audience categories.

We also presented human reviewers with rank-ordered lists of categories generated by the three activity models; the reviewers then indicated which of the classifications they agreed with. Again, the best-performing model used binary cross-entropy with an affinity threshold of 8. This is where we saw an average of 81% agreement between the system’s per-item predictions and the reviewers’ judgments.

This suggests that the contexts of use identified by our system could help product discovery algorithms deliver more-relevant results, improving the customer experience. Moreover, the minimal human supervision required to produce training data means that our method could be expanded to new categories with relatively little effort.