In recent years, data representation has emerged as an important research topic within machine learning.

Natural-language-understanding (NLU) systems, for instance, rarely take raw text as inputs. Rather, they take embeddings, data representations that preserve semantic information about the text but present it in a consistent, formalized way. Using embeddings rather than raw text has been shown time and again to improve performance on particular NLU tasks.

At this year’s IEEE Spoken Language Technologies conference, my colleagues and I will present a new data representation scheme specifically geared to the types of NLU tasks performed by conversational AI agents like Alexa.

We test our scheme on the vital task of skill selection, or determining which Alexa skill among thousands should handle a given customer request. We find that our scheme cuts the skill-selection error rate by 40% relative to raw-text input, which should help make customer interactions with Alexa more natural and satisfying.

In Alexa’s NLU systems, customer utterances undergo a series of increasingly fine-grained classifications. First, the utterance is classified by domain, or general subject area: Music is one popular domain, Weather another.

Then the utterance is classified by intent, or the action the customer wants performed. Within the Music domain, possible intents are PlayMusic, LoadPlaylist, SearchMusic, and so on.

Finally, individual words of the utterance are classified according to slot type. In the utterance “play ‘Nice for What’ by Drake,” “Nice for What” fills the slot SongName, and “Drake” fills the slot SongArtist.

Our new data representation scheme leverages the fact that domains, intents, and slots form a natural hierarchy. A set of slots, together with the words typically used to string them together, defines an intent; similarly, a set of intents defines a domain.
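To make the nesting concrete, here is a minimal Python sketch of the hierarchy as a nested dictionary. The Music intents and the `SongName`/`SongArtist` slots come from the examples above; the Weather intent `GetWeather`, the slots `PlaylistName` and `City`, and both helper functions are hypothetical placeholders, not Alexa's actual taxonomy.

```python
# Hypothetical label hierarchy: domain -> intent -> set of slot types.
# Only the Music examples come from the text; everything else is illustrative.
HIERARCHY = {
    "Music": {
        "PlayMusic": {"SongName", "SongArtist"},
        "LoadPlaylist": {"PlaylistName"},
        "SearchMusic": {"SongName", "SongArtist"},
    },
    "Weather": {
        "GetWeather": {"WeatherCondition", "City"},
    },
}


def slots_for_domain(domain: str) -> set[str]:
    """Union of the slot types used by every intent in a domain."""
    return set().union(*HIERARCHY[domain].values())


def domain_of_intent(intent: str) -> str:
    """Walk up the hierarchy from an intent to its parent domain."""
    for domain, intents in HIERARCHY.items():
        if intent in intents:
            return domain
    raise KeyError(intent)
```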

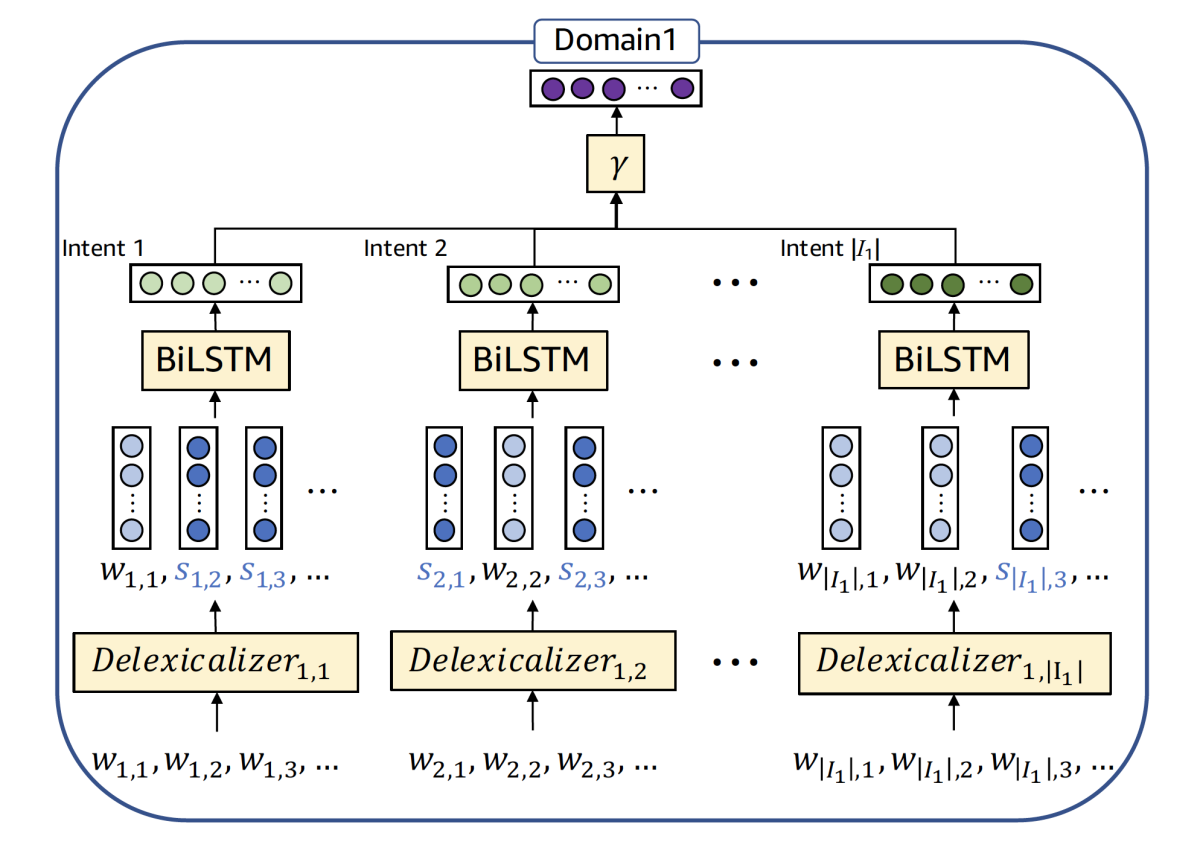

To produce our representation, we train a neural network on 246,000 utterances covering 17 domains. The network first produces a slot representation, then uses that to produce an intent representation, then uses that to produce a domain representation.

During training, the network is evaluated on how accurately it classifies domains — even though its purpose is not classification but representation. That evaluation criterion effectively enforces the representational hierarchy: it ensures that the slot and intent representations do not lose any information essential to domain representation.
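The post does not name the training loss. A common choice for a classification criterion like this is softmax cross-entropy over the domain labels, sketched below under that assumption; `domain_logits` stands for hypothetical per-domain scores computed from the domain representation.

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Convert raw domain scores into a probability distribution."""
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def domain_loss(domain_logits: list[float], true_domain: int) -> float:
    """Softmax cross-entropy against the gold domain index (assumed loss)."""
    probs = softmax(domain_logits)
    return -math.log(probs[true_domain])
```

With 17 domains, as in the training set described above, uniform logits give a loss of ln 17 ≈ 2.83, and the loss falls toward 0 as the network puts more probability on the correct domain.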

Inputs to the network first pass through a “de-lexicalizer,” which substitutes generic slot names for particular slot-values. So, for instance, the utterance “play ‘Nice for What’ by Drake” becomes “play SongName by SongArtist”. Note that the network does not need to perform the slot classification itself; that’s handled by a separate NLU system. Again, the purpose of this network is to learn the best way to represent classifications, not to do the classifying.
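A de-lexicalizer of this kind can be sketched as a span-replacement pass. Here we assume, hypothetically, that the separate slot tagger emits `(start, end, slot_name)` token spans with `end` exclusive; the real interface is not described in the post.

```python
def delexicalize(tokens: list[str], slots: list[tuple[int, int, str]]) -> list[str]:
    """Replace each tagged token span with its slot name.

    `slots` holds (start, end, slot_name) spans, `end` exclusive
    (a hypothetical format for the slot tagger's output).
    """
    by_start = {start: (end, name) for start, end, name in slots}
    out: list[str] = []
    i = 0
    while i < len(tokens):
        if i in by_start:
            end, name = by_start[i]
            out.append(name)  # the whole span collapses to one placeholder
            i = end
        else:
            out.append(tokens[i])  # ordinary word passes through unchanged
            i += 1
    return out
```

Applied to the example above, `delexicalize(["play", "Nice", "for", "What", "by", "Drake"], [(1, 4, "SongName"), (5, 6, "SongArtist")])` yields `["play", "SongName", "by", "SongArtist"]`.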

The de-lexicalized utterance then passes to an embedding layer, which employs an off-the-shelf embedding network. That network converts words into fixed-length vectors — strings of numbers, like spatial coordinates, but in a high-dimensional space — such that words with similar meanings are clustered together.
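The property that words with similar meanings cluster together is usually measured with cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; they are not taken from any real embedding model, which would typically use hundreds of dimensions.

```python
import math

# Toy vectors, invented for illustration only.
EMBEDDINGS = {
    "rain": [0.9, 0.1, 0.0],
    "storm": [0.8, 0.2, 0.1],
    "play": [0.0, 0.1, 0.9],
}


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity: near 1 for vectors pointing the same way."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)
```

Here `cosine(EMBEDDINGS["rain"], EMBEDDINGS["storm"])` is far larger than `cosine(EMBEDDINGS["rain"], EMBEDDINGS["play"])`, mirroring how related weather words sit near each other.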

Specific words that make it through the de-lexicalization process — such as “play” and “by” — are simply embedded by the network in the standard way. But slot names are handled a little differently. Before we begin training our representation network, our algorithms comb through the training data to identify all the possible values that each slot can take on. The WeatherCondition slot in the Weather domain, for instance, might take on values such as “storm”, “rain”, “snow”, or “blizzard”.
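Gathering the possible values of each slot amounts to a single pass over the annotated training data. This sketch assumes a hypothetical annotation format of token lists paired with `(start, end, slot_name)` spans, `end` exclusive.

```python
from collections import defaultdict


def collect_slot_values(annotated):
    """Map each slot name to the set of surface values seen in training.

    `annotated` is an iterable of (tokens, spans) pairs, where each span
    is (start, end, slot_name) with `end` exclusive (hypothetical format).
    """
    values = defaultdict(set)
    for tokens, spans in annotated:
        for start, end, name in spans:
            values[name].add(" ".join(tokens[start:end]))
    return dict(values)
```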

We then embed each of those words and average the embeddings. Since embedded words with similar meanings are near each other in the representational space, averaging the embeddings of several related words captures their general vicinity in the space. Prior to training, then, each slot name is embedded as the average of the embeddings of its possible values.
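The averaging step might look like the following minimal sketch. Skipping values that have no embedding is our assumption, since the post does not say how such values are handled; multi-word values would also need their own treatment, which this sketch does not attempt.

```python
def average_embedding(words, embeddings):
    """Element-wise mean of the vectors for `words` (all of equal length)."""
    vecs = [embeddings[w] for w in words]
    dim = len(vecs[0])
    return [sum(v[i] for v in vecs) / len(vecs) for i in range(dim)]


def init_slot_embeddings(slot_values, embeddings):
    """Initialize each slot name's vector as the mean of its values' vectors.

    Values missing from `embeddings` are skipped (an assumption).
    """
    init = {}
    for slot, values in slot_values.items():
        known = [v for v in values if v in embeddings]
        if known:
            init[slot] = average_embedding(known, embeddings)
    return init
```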

The training process modifies the settings of the embedding network, tuning it to the specific characteristics of the slots, intents, and domains represented in the training data. But the basic principle — grouping vectors by meaning — persists.

The embeddings for a de-lexicalized utterance then pass to a bidirectional long short-term memory network. Long short-term memory networks (LSTMs) process sequential data in order, and each output factors in the outputs that preceded it. LSTMs are widely used in NLU because they can learn to interpret words in light of their positions in a sentence. A bidirectional LSTM (bi-LSTM) processes the same sequence of inputs twice, once from front to back and once from back to front.
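For readers who want to see the mechanics, below is a minimal, untrained LSTM cell and bidirectional encoder in plain Python. Using the concatenated final hidden states of the two passes as the sentence vector is one common convention and an assumption here; the post does not say how the bi-LSTM's outputs are combined. A real system would use a deep-learning library and trained weights.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def matvec(W, x):
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


class LSTMCell:
    """A single LSTM cell with small random (untrained) weights."""

    def __init__(self, input_dim, hidden_dim, seed=0):
        rng = random.Random(seed)

        def mat(rows, cols):
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        self.hidden_dim = hidden_dim
        # One weight matrix per gate (input, forget, output, candidate),
        # each applied to the concatenation [input; previous hidden state].
        self.W = {g: mat(hidden_dim, input_dim + hidden_dim) for g in "ifoc"}
        self.b = {g: [0.0] * hidden_dim for g in "ifoc"}

    def step(self, x, h, c):
        z = x + h  # list concatenation of input and previous hidden state
        pre = {g: [a + b for a, b in zip(matvec(self.W[g], z), self.b[g])] for g in "ifoc"}
        i = [sigmoid(v) for v in pre["i"]]  # input gate
        f = [sigmoid(v) for v in pre["f"]]  # forget gate
        o = [sigmoid(v) for v in pre["o"]]  # output gate
        cand = [math.tanh(v) for v in pre["c"]]  # candidate cell state
        c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, cand)]
        h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
        return h, c

    def run(self, xs):
        h = [0.0] * self.hidden_dim
        c = [0.0] * self.hidden_dim
        for x in xs:
            h, c = self.step(x, h, c)
        return h  # final hidden state summarizes the sequence


def bilstm_encode(xs, fwd, bwd):
    """Concatenate the final states of a front-to-back and a back-to-front pass."""
    return fwd.run(xs) + bwd.run(list(reversed(xs)))
```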

The output of the bi-LSTM is a vector, which serves as the intent representation. The intent vector then passes through a single network layer, which produces a domain representation.
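One plausible reading of “a single network layer” is a fully connected layer. The sketch below assumes a tanh activation, which the post does not specify; `W` and `b` stand for the layer's learned weights and bias.

```python
import math


def dense_layer(x, W, b):
    """One fully connected layer: tanh(W @ x + b).

    Maps an intent vector `x` to a domain representation with one
    entry per row of `W`. The tanh activation is an assumption.
    """
    return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj) for row, bj in zip(W, b)]
```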

To evaluate our representation scheme, we used its encodings as inputs to the two-stage skill-selection system that we previously reported. When using raw text as input, that system routes requests to a particular skill with 90% accuracy; our new representation boosts accuracy to 94%.
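The 40% figure quoted at the start is the relative reduction in error rate implied by these two accuracies:

$$
\frac{(1 - 0.90) - (1 - 0.94)}{1 - 0.90} = \frac{0.10 - 0.06}{0.10} = 0.40
$$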

To show that the success of our representation depends on the hierarchical nesting of classification categories, we also compared it with three systems of our own design that produce representations by combining bi-LSTM encodings of de-lexicalized inputs with learned domain and intent embeddings. All three of those systems improved on raw text, but none matched the performance of the hierarchical system.

Acknowledgments: Jihwan Lee, Dongchan Kim, Ruhi Sarikaya