In March 1989, strange things began to happen in the US and Canada. The Hydro-Quebec electric grid collapsed within 90 seconds. A strong electric current surged through the surface bedrock making all intervention impossible. Over 6 million people were left without power for nine hours. At the same time, over in the United States, 200 instances of power grid malfunctions were reported. More worryingly, the step-up transformer at the New Jersey Salem Nuclear Power Plant failed and was put out of commission.

These outages weren’t caused by an earthquake, a terrorist group or some other terrestrial event. Instead, the culprit was a massive solar coronal mass ejection (CME) that was 36 times the size of earth.

The sun emits flares in the form of heat and light on a regular basis. These flares reach the earth in about eight minutes and, while ongoing, they interrupt radio communications signals. The flares often come with a surge of high-energy solar particles, which can travel at 80 percent of the speed of light and reach our planet in 10 to 20 minutes.

Earth’s magnetic fields protect us against much of the sun’s activity, but in some circumstances that radiation can seep through earth’s protective atmosphere. On occasion, solar flares burst forth along with an eruption called a coronal mass ejection, or CME. CMEs are massive clouds of plasma and magnetic fields, and can (when the accompanying magnetic fields are oriented in the correct direction) cause the magnetic fields around earth to begin oscillating like a cosmic gong. When solar coronal mass ejections collide with the earth’s magnetosphere, they can induce geomagnetic solar superstorms.

Superstorms such as the one in March 1989 are rare indeed, estimated to occur only once every 50 years. Experts who study extreme events, like super-volcanoes or asteroid impacts, frequently call these occurrences low frequency/high consequence events. NASA scientists are working to understand what turns an average solar storm into a superstorm, just as meteorologists have been able to understand how a tropical storm over the ocean turns into a hurricane. The more we understand about what causes such space weather, the more we can improve our ability to forecast it and mitigate its effects.

The difficulty of predicting superstorms

However, predicting superstorms and developing early response systems for these extreme events is a difficult endeavor. For one, given just how rare superstorms are, there are very few historical examples that can be used to train algorithms. This makes common machine learning approaches like supervised learning woefully inadequate for predicting superstorms. Additionally, with dozens of past and current satellites gathering space weather information from different key vantage points around Earth, the amount of data is prodigious, and finding correlations with conventional search methods is laborious.

NASA is working with AWS Professional Services and the Amazon Machine Learning (ML) Solutions Lab to use unsupervised learning and anomaly detection to explore the extreme conditions associated with superstorms. The Amazon ML Solutions Lab is a program that enables AWS customers to connect with machine learning experts within Amazon.

With the power and speed of AWS, analyses to predict superstorms can be carried out by sifting through as many as 1,000 data sets at a time. NASA’s approach relies on classifying superstorms based on anomalies, rather than relying on an arbitrary range of magnetic indices. More specifically, NASA’s anomaly detection relies on simultaneous observations of solar wind drivers and responses in the magnetic fields around earth.

Superstorms can be modeled as anomalous outlier events. Anomaly score values and evolution can be used to identify patterns that distinguish superstorm events from other solar storms. The anomaly scores offer an alternative method of categorizing extreme events that does not rely on arbitrary ranges of geomagnetic indices but rather on features of the storms themselves.
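To make "anomaly score values and evolution" concrete, here is a minimal sketch of one way to turn a series of scores into events that can be compared by peak and duration. It is an illustration only, not NASA's method: the `ScoreEvent` class, the `extract_events` function, the threshold of 1.0, and the sample scores are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ScoreEvent:
    start: int    # index of the first sample above the threshold
    end: int      # index of the last sample above the threshold
    peak: float   # highest anomaly score inside the event

    @property
    def duration(self):
        # Number of consecutive samples the event spans.
        return self.end - self.start + 1


def extract_events(scores, threshold):
    """Group consecutive samples with score > threshold into events."""
    events = []
    current = None
    for i, score in enumerate(scores):
        if score > threshold:
            if current is None:
                current = ScoreEvent(start=i, end=i, peak=score)
            else:
                current.end = i
                current.peak = max(current.peak, score)
        elif current is not None:
            # The run of high scores just ended; close the event.
            events.append(current)
            current = None
    if current is not None:
        events.append(current)
    return events


# Hypothetical scores: a brief blip, then a longer and more intense episode.
scores = [0.2, 0.3, 1.4, 0.3, 0.2, 1.6, 2.8, 3.9, 3.1, 1.2, 0.4]
events = extract_events(scores, threshold=1.0)
for event in events:
    print(event.start, event.end, event.duration, event.peak)
```

Summarizing each event by how high its score climbs and how long it stays elevated describes the storm by its own features, which is the idea behind replacing fixed ranges of geomagnetic indices.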

Emergent features that can be used for anomaly detection include the particle density in our ionosphere – the lowest levels of space which overlap with our upper atmosphere. Most of the ionosphere is electrically neutral. However, solar radiation activity can cause electrons to be dislodged from atoms and molecules. As a result, particle density can increase by orders of magnitude on the sun-facing side of the planet during a superstorm. On the flip side, superstorms create a hole in the ionosphere on earth’s dusk side, as sunlight is not available to replenish the density of the ions after the ionosphere is lifted upward during the event.

Detecting anomalies with AWS

NASA uses Amazon SageMaker to train an anomaly detection model using the built-in Random Cut Forest (RCF) algorithm with heliophysics datasets compiled from various ground- and satellite-based instruments. Anomalies are easy to describe in that, when viewed in a plot, they are often easily distinguishable from the more typical data. With each data point, RCF associates an anomaly score. Low score values indicate that the data point is considered normal. High values indicate the presence of an anomaly in the data.
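How high a score must be to count as "high" is a choice left to the analyst. A common convention is to flag scores more than three standard deviations above the mean score. The sketch below applies that rule to a list of scores that are assumed to have already come from a trained model; the `flag_anomalies` function and the sample values are hypothetical, and nothing here states the cutoff NASA actually uses.

```python
from statistics import mean, pstdev


def flag_anomalies(scores, n_sigma=3.0):
    """Return (cutoff, flags), where flags[i] is True if scores[i] > cutoff.

    The cutoff is the mean score plus n_sigma population standard deviations.
    """
    if not scores:
        return 0.0, []
    cutoff = mean(scores) + n_sigma * pstdev(scores)
    return cutoff, [score > cutoff for score in scores]


# Hypothetical anomaly scores: nineteen quiet samples and one sharp spike.
scores = [1.0] * 19 + [9.0]
cutoff, flags = flag_anomalies(scores)
print("cutoff:", round(cutoff, 2))
print("flagged indices:", [i for i, flagged in enumerate(flags) if flagged])
```

A rule like this only works with enough samples: in a very short series, a single outlier inflates the standard deviation so much that it can never exceed the cutoff.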

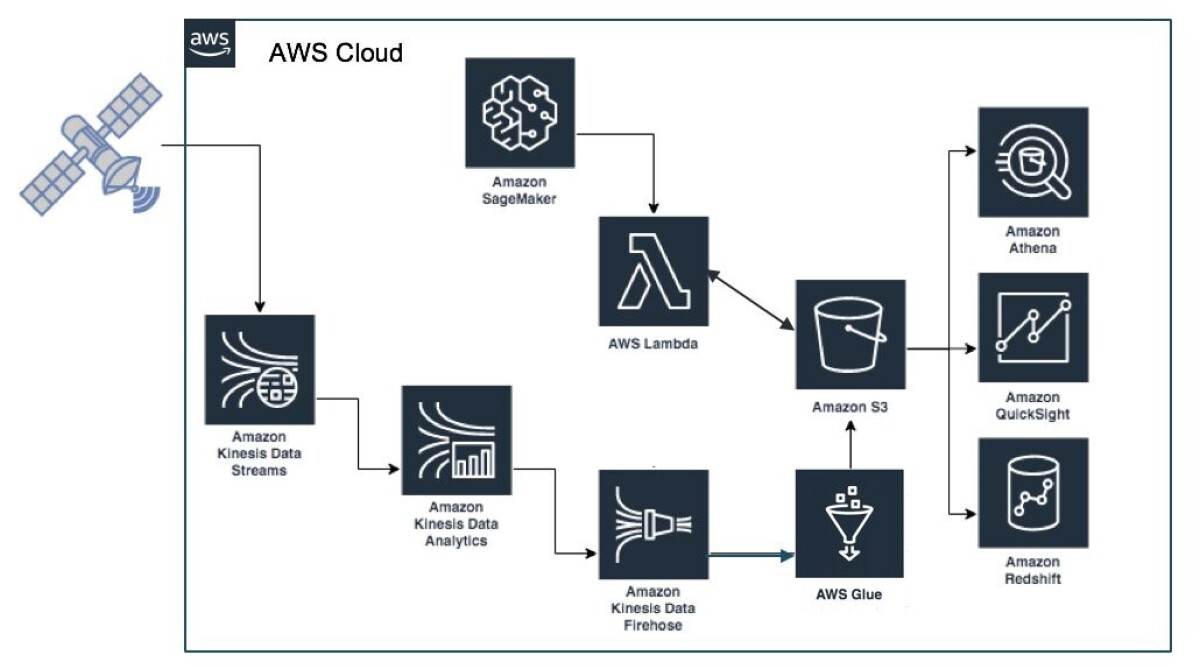

The Amazon ML Solutions Lab uses a serverless streaming data pipeline application to enable real-time monitoring. The pipeline processes in-situ observations from spacecraft to detect and alert on anomalies in real time. The streaming pipeline leverages Amazon Kinesis Data Streams to determine anomaly scores.

Amazon Kinesis Firehose, which can stream real-time data into Amazon S3, is used for record delivery and schema conversion to Parquet. In addition to real-time alerting, the model results are persistently stored in Amazon S3, where they can be further analyzed using Amazon SageMaker and visualized with Amazon QuickSight, which allows for the creation and publishing of interactive dashboards that include ML Insights.
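As a rough sketch of the delivery step, the code below serializes one scored observation as JSON, the input format Firehose expects when converting records to Parquet, and hands it to the `put_record` call of the boto3 Firehose client. The field names, the `example-anomaly-stream` delivery stream, and the sample values are hypothetical. The `send` function is not called here because it needs AWS credentials and an existing delivery stream.

```python
import json


def to_firehose_record(timestamp, source, score):
    """Serialize one scored observation as a newline-terminated JSON record."""
    payload = {"timestamp": timestamp, "source": source, "anomaly_score": score}
    # Firehose expects the payload as bytes under the "Data" key.
    return {"Data": (json.dumps(payload) + "\n").encode("utf-8")}


def send(record, stream_name="example-anomaly-stream"):
    """Deliver one record; requires AWS credentials and an existing stream."""
    import boto3  # imported lazily so the serializer works without boto3

    client = boto3.client("firehose")
    return client.put_record(DeliveryStreamName=stream_name, Record=record)


# Hypothetical observation, used only to show the serialized shape.
record = to_firehose_record("2024-01-01T00:00:00Z", "example-spacecraft", 3.9)
print(record["Data"])
```

The trailing newline keeps records separable if the same stream is ever delivered to S3 as raw JSON instead of Parquet.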

With a robust set of anomalies to examine, researchers can search for what causes them and linkages between them. NASA and AWS are developing a centralized data lake which will allow researchers to access and analyze cosmic-scale data with dynamic cloud compute resources. To date, the initiative has aggregated observational data from 50+ satellite missions containing images, time-series and miscellaneous telemetry data. Data is continually pre-processed and combined to develop visualizations that drive future heliophysics research and innovation.

To improve forecasting models, scientists can examine the anomalies and create simulations of what it would take to reproduce the superstorms we see today. They can amplify these simulations to replicate the most extreme cases in historical records, enabling model development to highlight subtle precursors to major space weather events.

“We have to look at superstorms holistically, just like meteorologists do with extreme weather events,” says Janet Kozyra, a heliophysicist who leads this project from NASA headquarters in Washington, D.C.

“Research in heliophysics involves working with many instruments, often in different space or ground-based observatories. There’s a lot of data, and factors like time lags add to the complexity. With Amazon, we can take every single piece of data that we have on superstorms, and use anomalies we have detected to improve the models that predict and classify superstorms effectively.”