Computer vision, the automatic recognition and description of images and video, has far-reaching applications, from identifying defects on high-speed assembly lines and guiding autonomous robots to analyzing medical images and identifying products and people in social media. This week, in line with the IEEE Computer Vision and Pattern Recognition (CVPR) conference, we’ve rounded up examples of how some of AWS's most innovative customers are using computer vision and pattern recognition technologies to improve business processes and customer experiences. The approaches range from data scientists building custom vision models with Amazon SageMaker to application developers using Amazon Rekognition and Amazon Textract to embed computer vision into their applications.

Advertising

In advertising and other online media, computer vision can automate content moderation. REA Group, a multinational digital advertising company specializing in property and real estate, provides search-based portals that enable property sellers to upload images of properties on the market to deliver a wide, searchable selection to their consumers. REA Group discovered that images uploaded to their portal often weren’t compliant with their usage terms. Some images included trademarks or contact details of the sellers, which created lead attribution challenges. They set up a dedicated team of individuals to manually review the images for unapproved content, but the large volume of daily uploads and the additional review process delayed the property listing time by several days. The REA team developed an image compliance system that automatically detects any noncompliance and notifies sellers. To augment their existing machine learning models, they're using Amazon Rekognition Text in Image, which detects and extracts text in images, enabling them to increase the accuracy of detecting noncompliance and reduce false positives by more than 56 percent. They added business rules that factored in a variety of predictions from their own models, and from Amazon Rekognition, to enable automated decision-making.
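As a rough illustration of the kind of business rule described above, the sketch below combines text detected by Amazon Rekognition with a score from an in-house model. It is not REA Group's implementation: the regular expressions, the thresholds, the `own_model_score` input, and the function names are all assumptions made for this example. Only the `detect_text` call and the `TextDetections` response fields (`DetectedText`, `Type`, `Confidence`) come from the Rekognition API.

```python
import re
from typing import Dict, List

# Hypothetical patterns for seller contact details; real rules would be broader.
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
URL_RE = re.compile(r"(?:https?://|www\.)\S+", re.IGNORECASE)


def detect_text_in_s3_image(bucket: str, key: str) -> Dict:
    """Call Amazon Rekognition DetectText on an image stored in Amazon S3."""
    import boto3  # imported lazily so the rule logic below runs without AWS access

    client = boto3.client("rekognition")
    return client.detect_text(Image={"S3Object": {"Bucket": bucket, "Name": key}})


def find_contact_details(response: Dict, min_confidence: float = 80.0) -> List[str]:
    """Return detected text lines that look like a phone number, email, or URL."""
    hits = []
    for detection in response.get("TextDetections", []):
        # Rekognition reports both LINE and WORD detections; lines are enough here.
        if detection.get("Type") != "LINE":
            continue
        if detection.get("Confidence", 0.0) < min_confidence:
            continue
        text = detection.get("DetectedText", "")
        if PHONE_RE.search(text) or EMAIL_RE.search(text) or URL_RE.search(text):
            hits.append(text)
    return hits


def is_noncompliant(response: Dict, own_model_score: float,
                    score_threshold: float = 0.5) -> bool:
    """Assumed rule: flag the image if either signal indicates a violation."""
    return bool(find_contact_details(response)) or own_model_score >= score_threshold
```

Keeping the rule separate from the API call means the decision logic can be unit tested against saved responses before it is wired to live uploads.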

Agriculture

Agriculture has also benefited from computer vision. Fish farming is one of the most efficient sources of protein, since a pound of feed equates to nearly a pound of protein. But the cold, dark waters of fish habitats make it nearly impossible to effectively manage these farms from the surface. Historically, fish farmers have had to randomly scoop fish out of the water to measure their weight and check for disease. Aquabyte’s machine learning solution reimagines this process by using underwater cameras that keep tabs on the fish and compare photos of them over time. The machine learning algorithms, running on Amazon SageMaker, can estimate how much each fish weighs while it’s still in the water. The system can also monitor the fish for sea lice, a parasite that is a major problem in salmon farms, and the subject of significant regulation in Norway, where the bulk of Aquabyte’s client base currently operates. Without a solution like Aquabyte, managing sea lice amounts to nearly a quarter of the cost of operating a salmon farm. Aquabyte’s cameras have counted 2 million sea lice to date, the result of billions of images being captured. The Aquabyte team has been working on methods that would allow farmers to track individual fish for growth-tracking and breeding purposes. In the future, machine learning might even help automate elements of the farms by intelligently distributing fish feed, for example.

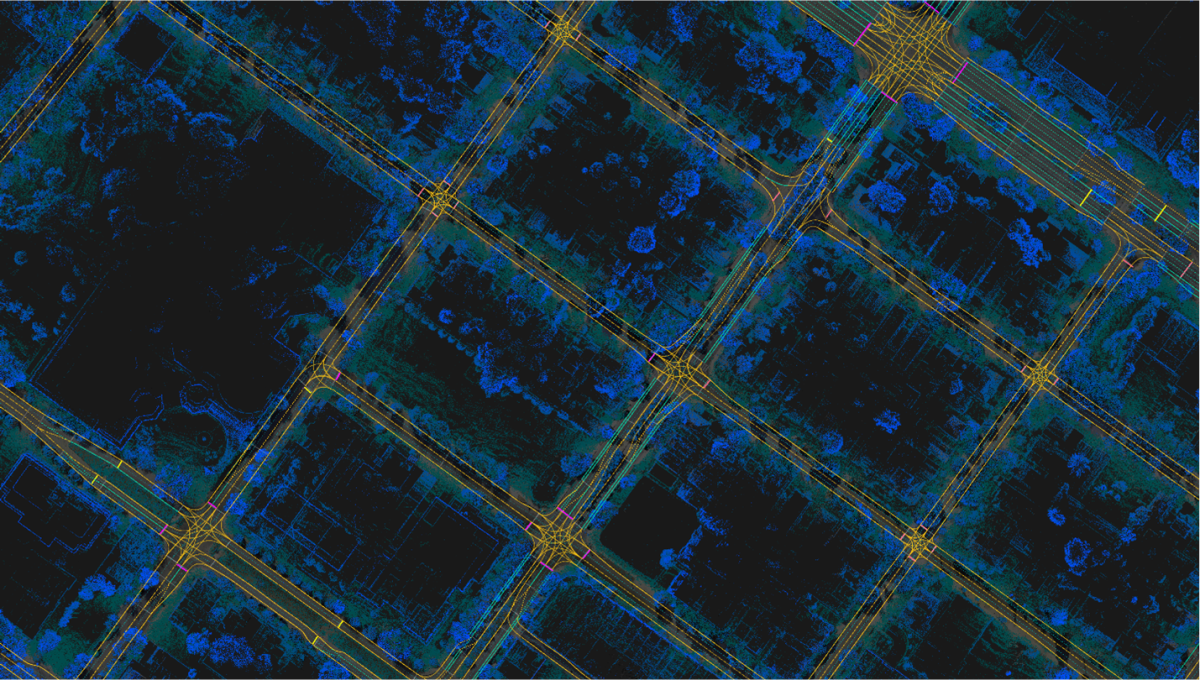

Autonomous driving

Industries like autonomous driving wouldn’t even be possible without the help of computer vision. Perhaps you think the world is already sufficiently mapped. With the advent of satellite images and Google Street View, it seems like every square inch of the globe is represented in data. But for autonomous vehicles, much of the world is uncharted territory. That’s because the maps designed for humans “can’t be consumed by robots,” says Tom Wang, the director of engineering at DeepMap, a Palo Alto startup focused on solving the mapping and localization challenge for autonomous vehicles. According to Wang, these new kinds of vehicles need higher-precision maps with richer semantics, such as traffic signals, the many different traffic signs, driving boundaries, and connecting lanes. For DeepMap, computer vision is critical. DeepMap needs to run a vast volume of image detections to automatically generate a comprehensive list of map features and detect dynamic road changes. Using Amazon SageMaker, DeepMap updates training models within a day and runs image detection on tens of millions of images daily to keep up with ever-changing conditions.

Education

In the wake of the COVID-19 pandemic, many educational institutions needed to quickly pivot to the online proctoring of exams, leading to a need for new ways to verify identity. Certipass, a UNI ISO standards accredited body for the certification of digital skills, is the primary provider of the international digital competency certification, the European Informatics Passport (EIPASS).

Since the EIPASS Certification is an international standard, Certipass has made it their mission to ensure maximum security, objectivity, transparency, and fairness during the entire online evaluation process. Certipass used Amazon Rekognition for automated candidate identity verification during tests that are in line with the e-Competence Framework for Information and Communication Technology (CEN) and the Digital Competence Framework for Citizens (Joint Research Centre). They were able to build the solution in under 30 days to enable all their testing centers to test candidates online during COVID-19.
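To make the idea of automated identity verification concrete, here is a minimal sketch that compares a reference photo with a live capture using the Amazon Rekognition `compare_faces` operation. It is not Certipass's solution. The 90 percent threshold, the rule that rejects a capture containing any additional unmatched face, and the function names are assumptions chosen for illustration.

```python
from typing import Dict


def compare_faces_bytes(reference_photo: bytes, live_photo: bytes,
                        threshold: float = 90.0) -> Dict:
    """Call Amazon Rekognition CompareFaces on two images passed as raw bytes."""
    import boto3  # imported lazily so the decision rule below runs without AWS access

    client = boto3.client("rekognition")
    return client.compare_faces(
        SourceImage={"Bytes": reference_photo},
        TargetImage={"Bytes": live_photo},
        SimilarityThreshold=threshold,
    )


def identity_verified(response: Dict, threshold: float = 90.0) -> bool:
    """Assumed rule: one strong match and no other faces in the live capture."""
    matches = response.get("FaceMatches", [])
    unmatched = response.get("UnmatchedFaces", [])
    best_similarity = max((m.get("Similarity", 0.0) for m in matches), default=0.0)
    # Rejecting captures with extra faces is a hypothetical proctoring policy,
    # not something required by the API.
    return best_similarity >= threshold and not unmatched
```

In practice the threshold would be tuned against the false accept and false reject rates the exam process can tolerate.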

Financial services

In financial services, Aella Credit provides easy access to credit in emerging markets using biometric, employer, and mobile phone data. For those in emerging markets, identity verification and validation is one of the major challenges to accessing retail banking services. How can you know that people are who they say they are in communities that don't have proper identification systems? Aella Credit uses Amazon Rekognition to analyze images to verify a customer’s identity and give them access to financial and healthcare services with minimal friction. Amazon Rekognition helps to automate video and image analysis, with no machine learning expertise required. Verifying someone’s identity manually would have taken days; it now happens in seconds. Customers can receive their loan in their account in less than five minutes, broadening access to credit.

Financial technology

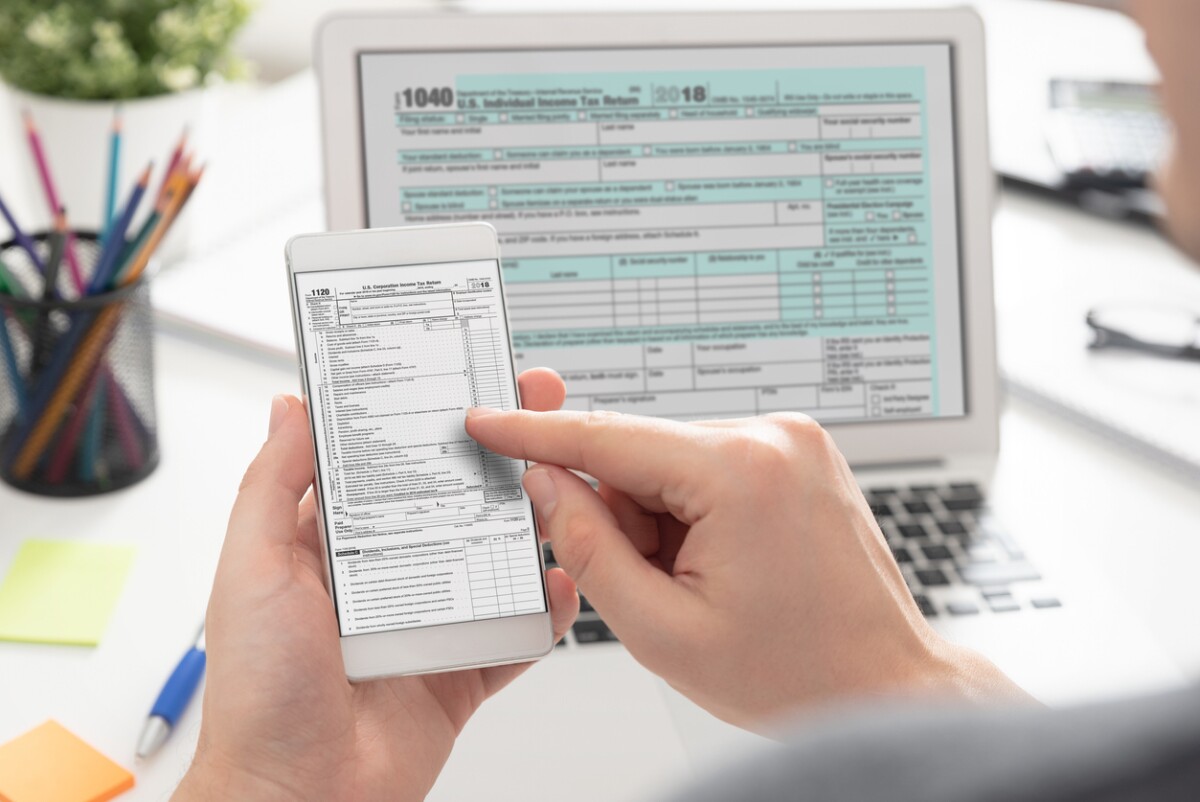

To make sure users are getting the largest possible tax refund, Intuit incorporates machine learning throughout the TurboTax experience to help users file their taxes more efficiently. TurboTax uses machine learning to shorten the filing process, which takes an average of 13 hours.

With Intuit’s computer vision capabilities supported by Amazon Textract, entering information from tax forms like W-2s or 1099s takes seconds. Rather than requiring a user to enter form fields manually, the service scans pictures of the forms and digitizes them. Then, using contextual data from TurboTax’s existing database of tax codes and compliance forms, Amazon Textract verifies accuracy and identifies any anomalies or missing data for the user.
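The sketch below shows one way form fields could be pulled out of an Amazon Textract response and checked for missing data. It is not Intuit's pipeline. It assumes the documented `analyze_document` response shape for `FeatureTypes=["FORMS"]` (`KEY_VALUE_SET` blocks linked to `WORD` blocks through `VALUE` and `CHILD` relationships), and the helper names and required-field list are hypothetical.

```python
from typing import Dict, List


def analyze_form(image_bytes: bytes) -> Dict:
    """Call Amazon Textract AnalyzeDocument with form (key-value) extraction."""
    import boto3  # imported lazily so the parsing below runs without AWS access

    client = boto3.client("textract")
    return client.analyze_document(
        Document={"Bytes": image_bytes}, FeatureTypes=["FORMS"]
    )


def _related_ids(block: Dict, rel_type: str) -> List[str]:
    """Collect the block IDs linked from `block` by one relationship type."""
    return [
        block_id
        for rel in block.get("Relationships", [])
        if rel["Type"] == rel_type
        for block_id in rel["Ids"]
    ]


def _text_of(block: Dict, by_id: Dict[str, Dict]) -> str:
    """Join the WORD children of a key or value block into one string."""
    words = [
        by_id[i]["Text"]
        for i in _related_ids(block, "CHILD")
        if by_id[i]["BlockType"] == "WORD"
    ]
    return " ".join(words)


def extract_form_fields(response: Dict) -> Dict[str, str]:
    """Map each detected form key to the text of its value."""
    by_id = {b["Id"]: b for b in response.get("Blocks", [])}
    fields = {}
    for block in by_id.values():
        if block["BlockType"] == "KEY_VALUE_SET" and "KEY" in block.get("EntityTypes", []):
            key = _text_of(block, by_id)
            value = " ".join(
                _text_of(by_id[v], by_id) for v in _related_ids(block, "VALUE")
            )
            fields[key] = value
    return fields


def missing_fields(fields: Dict[str, str], required: List[str]) -> List[str]:
    """Return required field names that are absent or blank (hypothetical check)."""
    return [name for name in required if not fields.get(name, "").strip()]
```

Checkbox values (`SELECTION_ELEMENT` blocks) are ignored here to keep the example short.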

Healthcare

Machine learning plays a key role in many health-related realms, from providers and payers looking to expedite the care continuum to pharma and biotech researchers looking to reduce costs and speed up drug discovery and disease detection. Researchers at the Duke Center for Autism and Brain Development are using machine learning to screen for autism spectrum disorder (ASD) in children. It’s critically important to diagnose ASD as early in a child’s development as possible: starting treatment at 18 to 24 months of age can increase a child’s IQ by up to 17 points, in some cases moving them into or above the “average” child IQ range of 90-110, and in turn significantly improve their quality of life. Currently, children may not receive a diagnosis until well after their third birthday. By combining machine learning and computer vision, powered by AWS, an interdisciplinary team of researchers at Duke University has created a faster, less expensive, more reliable, and more accessible system to screen children for ASD.

Media and entertainment

Computer vision technology is helping sports organizations like the National Football League (NFL) improve the game for fans. The NFL works with AWS to develop real-time, state-of-the-art cloud technology leveraging machine learning and artificial intelligence to increase the efficiency and pace of the game.

For example, deep learning and computer vision technologies are being explored to aid game officiating, including real-time football tracking. Within days, AWS and NFL scientists were able to create custom training data sets of thousands of images extracted from NFL broadcast game footage using Amazon SageMaker Ground Truth.

Working with the Amazon ML Solutions Lab, the team used Amazon SageMaker and GluonCV with MXNet to train and optimize several state-of-the-art deep learning-based object detection models, such as Faster R-CNN and YOLOv3, to accurately detect the football across video frames. This led to a first-of-its-kind football tracking model that performs well in a number of complex scenarios, such as when the ball is highly occluded or only partially visible from different camera angles.

The NFL also uses computer vision to search through thousands of media assets more easily and quickly. The NFL photo team, the official photographers of the NFL, has millions of photos in its archive and generates 500,000 photos each season. Working manually, they were able to tag 50,000 images over 18 months. By using Amazon Rekognition custom face collection, text in image, object detection, and Custom Labels, an automated machine learning object detection service, they were able to apply detailed tags for players, teams, objects, action, jerseys, location, and more to their entire photo collection in a fraction of the time it took previously. This allowed them to make these photos searchable and usable to everyone in the company in ways that weren't possible before.
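One simple way to turn automatically generated tags into a searchable archive is an inverted index from tag to photo. The sketch below is not the NFL's system. It assumes responses in the shape returned by the Amazon Rekognition `detect_labels` operation (a `Labels` list with `Name` and `Confidence`), and the photo IDs, index structure, and function names are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Set


def detect_labels_s3(bucket: str, key: str, min_confidence: float = 80.0) -> Dict:
    """Call Amazon Rekognition DetectLabels on an image stored in Amazon S3."""
    import boto3  # imported lazily so the indexing below runs without AWS access

    client = boto3.client("rekognition")
    return client.detect_labels(
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        MinConfidence=min_confidence,
    )


def build_tag_index(responses: Dict[str, Dict]) -> Dict[str, Set[str]]:
    """Build an inverted index: lowercase tag -> set of photo IDs carrying it."""
    index = defaultdict(set)
    for photo_id, response in responses.items():
        for label in response.get("Labels", []):
            index[label["Name"].lower()].add(photo_id)
    return dict(index)


def search(index: Dict[str, Set[str]], *tags: str) -> Set[str]:
    """Return the photo IDs that carry every requested tag."""
    sets = [index.get(tag.lower(), set()) for tag in tags]
    return set.intersection(*sets) if sets else set()
```

For example, `search(index, "helmet", "football")` returns only the photos tagged with both labels. A production archive would more likely store the tags in a search service or database than in memory.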

For Sportradar, the global provider of sports data and intelligence for the betting and media industries, which provides data coverage from more than 200,000 events annually, advances in computer vision are an opportunity to expand the depth of sports data offered to customers and reduce the costs of data collection through automation.

Sportradar is investing in computer vision research both through internal development and external partnerships to build computer vision data collection capabilities with an initial focus on tennis, soccer and snooker. Working with the Amazon ML Solutions Lab, Sportradar is exploring the application of state-of-the-art deep learning models for automated match event detection in soccer, moving beyond player and ball localization to understanding the intent of the play in terms of what is happening in the game.

To bring this technology into production as it matures, Sportradar is leveraging AWS services including Amazon SageMaker, Amazon EKS, Amazon MSK, Amazon FSx, and Amazon’s broad range of GPU and CPU compute instances for its computer vision processing pipeline. This infrastructure allows Sportradar's researchers to test and validate computer vision models at scale and bring models from the lab to production with minimal effort while delivering the low latency, reliability, and scalability needed for live sports betting use cases.

You can find more ways that AWS customers are innovating with computer vision here. More information about Amazon's participation at CVPR is available here.