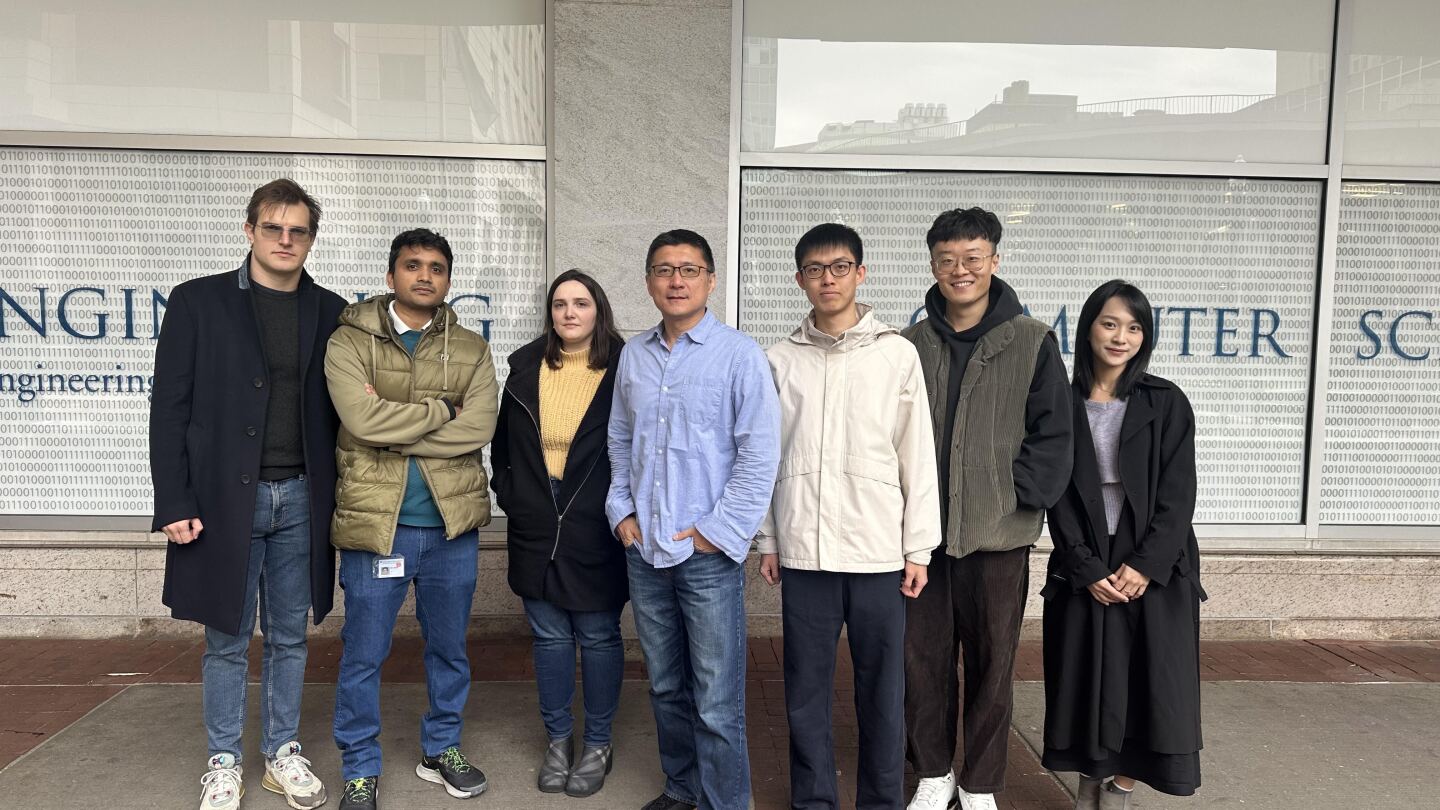

Yangruibo Ding - Team leader

Yangruibo Ding is a fifth-year PhD student in the Department of Computer Science at Columbia University. His research focuses on training language models to generate and understand source code. He is interested in modeling code-specific semantics to solve software engineering tasks. Recently, he has been working on improving the reasoning capabilities of code LMs so they can tackle complex coding tasks.

Jinjun Peng

Jinjun Peng is a second-year PhD student in the Department of Computer Science at Columbia University. His research focuses on enhancing AI models for software engineering in terms of both functionality and safety, including training code LLMs with better semantics and reasoning (NeurIPS’24) and generating secure code.

Alex Mathai

Alex Mathai is a second-year PhD student at Columbia University. His research interests span Large Language Models, Machine Learning, and Software Engineering. His current research focuses on building LLM agents that help debug issues in codebases with millions of lines of code, such as the Linux kernel. Previously, he worked as a Research Engineer at IBM Research for three years, focusing on AI4Code.

Ira Ceka

Ira Ceka is a third-year PhD student at Columbia University, specializing in vulnerability detection. Her recent work on causal deep learning for vulnerability detection has been accepted at ICSE. She is also interested in large language model (LLM) applications; her current submission explores effective prompting techniques for CWE-based vulnerability detection.

Adam Štorek

Adam Štorek is a second-year PhD student in Computer Science at Columbia University. He works on software security, program analysis, and natural language processing, focusing on adversarial attacks on coding assistants. He presented at CCS 2024 and ACL 2023 and gained industry experience at Google and Moderna.

Yun-Yun Tsai

Yun-Yun Tsai is a fourth-year PhD candidate in the Department of Computer Science at Columbia University, advised by Professor Junfeng Yang. Her research focuses on security in artificial intelligence. She is particularly interested in improving the trustworthiness, security, and robustness of machine learning (ML) algorithms and computer systems.

Junfeng Yang - Faculty advisor

Yang’s research centers on building reliable, secure, and fast software systems. He has invented techniques and tools to analyze, test, debug, monitor, and optimize real-world software, including Android, Linux, production systems at Microsoft, and machine learning systems, benefiting billions of users. His research has resulted in numerous vulnerability patches to real-world systems and press coverage in Scientific American.