- EMNLP 2019 Workshop on DeepLo: The performance of deep neural models can deteriorate substantially when there is a domain shift between training and test data. For example, the pre-trained BERT model can easily be fine-tuned with just one additional output layer to create a state-of-the-art model for a wide range of tasks. However, the fine-tuned BERT model performs considerably worse in the zero-shot setting when applied to a different domain. In this …

- EMNLP 2019: In this paper, we establish the effectiveness of using hard negatives, coupled with a siamese network and a suitable loss function, for the tasks of answer selection and answer triggering. We show that the choice of sampling strategy is key to achieving improved performance on these tasks. Evaluating on recent answer selection datasets (InsuranceQA, SelQA, and an internal QA dataset), we show that using …

- EMNLP 2019: Diacritic restoration has gained importance with the growing need for machines to understand written texts. The task is typically modeled as a sequence labeling problem, and Bidirectional Long Short-Term Memory (BiLSTM) models currently provide state-of-the-art results. Recently, Bai et al. (2018) showed the advantages of Temporal Convolutional Neural Networks (TCN) over Recurrent Neural Networks (RNN) for …

- SIGDIAL 2019: Dialog state tracking is used to estimate the current belief state of a dialog given the entire preceding conversation. Machine reading comprehension, on the other hand, focuses on building systems that read passages of text and answer questions that require some understanding of those passages. We formulate dialog state tracking as a reading comprehension task to answer the question: what is the state of the current …

- Interspeech 2019: Building socialbots that can have deep, engaging open-domain conversations with humans is one of the grand challenges of artificial intelligence (AI). To this end, bots need to be able to effectively leverage world knowledge spanning several domains when conversing with humans who have their own world knowledge. Existing knowledge-grounded conversation datasets are primarily stylized with explicit roles …

Related content

- July 22, 2020: Amazon scientists are seeing increases in accuracy from an approach that uses a new scalable embedding scheme.

- July 22, 2020: A dialogue simulator and a conversations-first modeling architecture give customers the ability to interact with Alexa in a natural, conversational manner.

- July 08, 2020: A new method extends virtual adversarial training to sequence-labeling tasks, which assign different labels to different words of an input sentence.

-

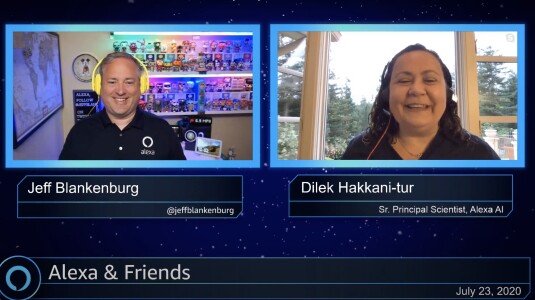

July 07, 2020Watch the replay of the live interview with Alexa evangelist Jeff Blankenburg.

- July 06, 2020: After nearly 40 years of research, the ACL 2020 keynote speaker sees big improvements coming in three key areas.

- July 02, 2020: Amazon researchers coauthor 17 conference papers and participate in seven workshops.