Ariadna Sanchez grew up steeped in the world of music performance and orchestra, learning to play violin at age 5 and setting her sights on a life in music. Today she’s a text-to-speech (TTS) research scientist at Amazon — and those early musical interests contributed to her career path.

Sanchez works in polyglot TTS, which involves researching voice models that can speak any language with a native accent. Ultimately, TTS is a mixed discipline — not just engineering or purely tech — and Sanchez says her musical background leads her to find novel solutions or look at problems in a unique way.

Linking music with tech

Growing up with a music-intensive education in Barcelona, Spain, Sanchez was thinking ahead to university by the time she was 15, and she wanted to find a degree program with a connection to music. She found one in the telecommunications engineering department at Barcelona’s Polytechnic University of Catalonia, where one of the branches was speech, music, and video processing. She was also intrigued by the program’s AI and machine learning offerings.

At the time, she was focused on music and how it could be applied to machine learning. One of her professors was working on creating a voice that could be modulated in different ways to sound more human, combining language and technological elements.

“That got me into the path of realizing ‘Oh, I actually really like this TTS side of things,’” she says. An internship at an acoustics consultancy also helped her realize that she wanted to do work where she could look for breakthroughs and “discover new things,” she says.

In her senior thesis, she combined those interests to develop an audio-based game. Sanchez says video games are both a hobby and a passion for her; she plays all types but is especially attracted to well-written, story-based games.

“I tried to understand how acoustics from different environments can affect the player’s perception and how the player can enjoy and navigate through an audio-only game,” she says.

The path to TTS research

An internship at Telefónica, where her work involved machine learning that focused on text-based natural language processing, helped determine the next steps on her journey. After finishing her undergraduate degree, she pursued a master’s in speech and language processing at the University of Edinburgh in Scotland.

There she studied natural language understanding, human-computer interaction, text-to-speech, and automatic speech recognition.

“I found TTS to be more engaging, overall,” she says. “Speech is not only about what you say, but also how you say it, how the person speaking sounds, et cetera.”

Sanchez took it upon herself to learn more about the nuances of languages including English, Scottish Gaelic, and Japanese. She links her fascination with that subject to her longtime interest in a wide variety of music, from punk to classical to mainstream pop and fusion styles. Her TTS research also piqued her interest in learning about languages and how they differ from each other.

“I have always really liked melodic music with lyrics, which made me intrigued about the nuances of language, how lyrics are composed and semantics of language,” she says. “It also made me really invested in learning languages to be able to understand the music I listen to.”

When Amazon recruiters visited the University of Edinburgh as Sanchez was finishing her degree, they were looking for a language engineer fluent in Spanish, and they hired her as an intern in that role.

That internship resulted in a full-time role at Amazon.

“My background is mostly on the engineering side, so I not only built more skills on the linguistics front during my internship, but I also learned a lot about how a team cooperates, and how important prioritization is to make a project successful,” she says.

Many accents, one voice

Now, almost four years into her job as a research scientist, Sanchez focuses on providing a more uniform voice experience. In the past, new languages and accents on Echo devices have had distinct voices, such as American Spanish and European Spanish, which sound like two different people. The goal of Sanchez’s research is to design models that pronounce words from various languages with the correct local accent, but in the same voice, for continuity.

“If you have a multilingual household like mine, it’s a bit weird to have different voices speaking different languages,” she says. “But if you have the same person speaking all these different languages back to you, it sounds less jarring.” She and her team have already shown this can work: the British English and American English masculine-sounding speakers now use the same voice.

Sanchez says that her work is also influenced by her reading about technology ethics, especially works by authors Cathy O’Neil and Caroline Criado Perez.

“Providing more voice options is important,” she says. “Having a bigger range of voices brings more variety and brings more validation to different communities.” To that end, her team works on developing polyglot voices that represent a wider range of voices and speaking styles.

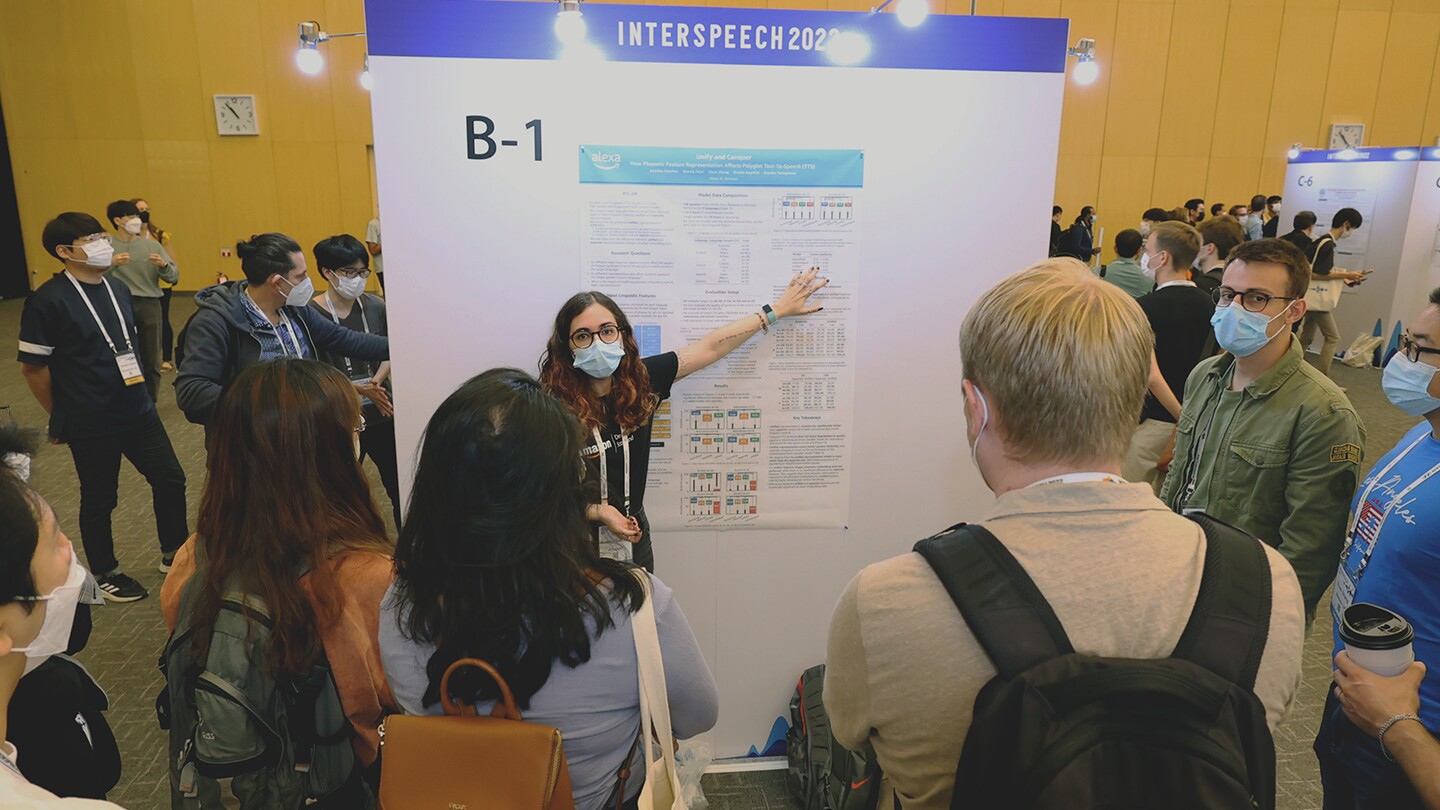

In September 2022, Sanchez presented “Unify and conquer: How phonetic feature representation affects polyglot text-to-speech (TTS)” at Interspeech 2022. The paper, one of three accepted to Interspeech, explored the two primary approaches to representing linguistic features within a multilingual model.

In the paper, Sanchez and her coauthors note that: “The main contribution of this paper lies in the experimentation and evaluation aimed at understanding how unified representations and separate representations of input linguistic features affect the quality of Polyglot synthesis in terms of naturalness and accent of the voice. To the best of our knowledge, this is the first work conducting a systematic study and evaluation on this subject.”

“When we were looking at our design choices for multispeaker multilingual models, we did not find any literature that compared different types of linguistic features thoroughly,” she says. “We decided to explore and write about two very distinct approaches to representing input linguistic features — unifying them based on phonetic knowledge, or separating all tokens that represent phonemes from different languages/accents. With this, we found that using a unified representation led to more natural and stable speech, while also having cleaner accent.”
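To make the distinction concrete, here is a minimal sketch of the two ways of assigning input tokens. It is only an illustration: the phoneme inventories, locale codes, and function names are hypothetical, and "unified" is approximated here by sharing a token whenever two languages use the same phoneme symbol, which is a simplification and not the feature design used in the paper.

```python
# Toy phoneme inventories (hypothetical, far smaller than any real lexicon).
INVENTORIES = {
    "en-US": ["p", "t", "k", "s", "æ", "ɹ"],
    "es-ES": ["p", "t", "k", "s", "θ", "r"],
}


def build_separate_vocab(inventories):
    """One token per (language, phoneme) pair: nothing is shared across languages."""
    vocab = {}
    for lang in sorted(inventories):
        for phoneme in inventories[lang]:
            vocab[(lang, phoneme)] = len(vocab)
    return vocab


def build_unified_vocab(inventories):
    """One token per phoneme symbol: languages reuse tokens for shared symbols."""
    vocab = {}
    for lang in sorted(inventories):
        for phoneme in inventories[lang]:
            if phoneme not in vocab:
                vocab[phoneme] = len(vocab)
    return vocab


def encode(phonemes, lang, vocab, unified):
    """Map a phoneme sequence to token ids under either scheme."""
    if unified:
        return [vocab[p] for p in phonemes]
    return [vocab[(lang, p)] for p in phonemes]


separate = build_separate_vocab(INVENTORIES)
unified = build_unified_vocab(INVENTORIES)

# The separate scheme needs a token for every language/phoneme pair,
# while the unified scheme only grows when a genuinely new symbol appears.
print(len(separate), len(unified))

# Under the unified scheme, the same sounds map to the same ids in both languages.
print(encode(["p", "t"], "en-US", unified, unified=True))
print(encode(["p", "t"], "es-ES", unified, unified=True))
```

In this toy setup, a model trained on the unified tokens sees the shared sounds in data from both languages, whereas the separate scheme treats them as unrelated inputs.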

And while this was an important step, Sanchez emphasizes there are several more steps to take: “To move forward in the field, we need to improve the control we have on speech parameters, like pitch, intonation, tone, and timbre, in isolation.”

She and her team continue to work toward even more natural-sounding speech that is closer to the way people actually speak.

“We’re at a very exciting point of text-to-speech, where we are moving away from the old TTS systems that sounded robotic towards a more approachable and friendly voice,” she says. “In the end, that is an important factor that allows our customers to have more engaging conversations with Alexa every day.”